OmniConsistency: An Image Style Transfer Model from the National University of Singapore

Date: 2025-05-30

OmniConsistency Explained

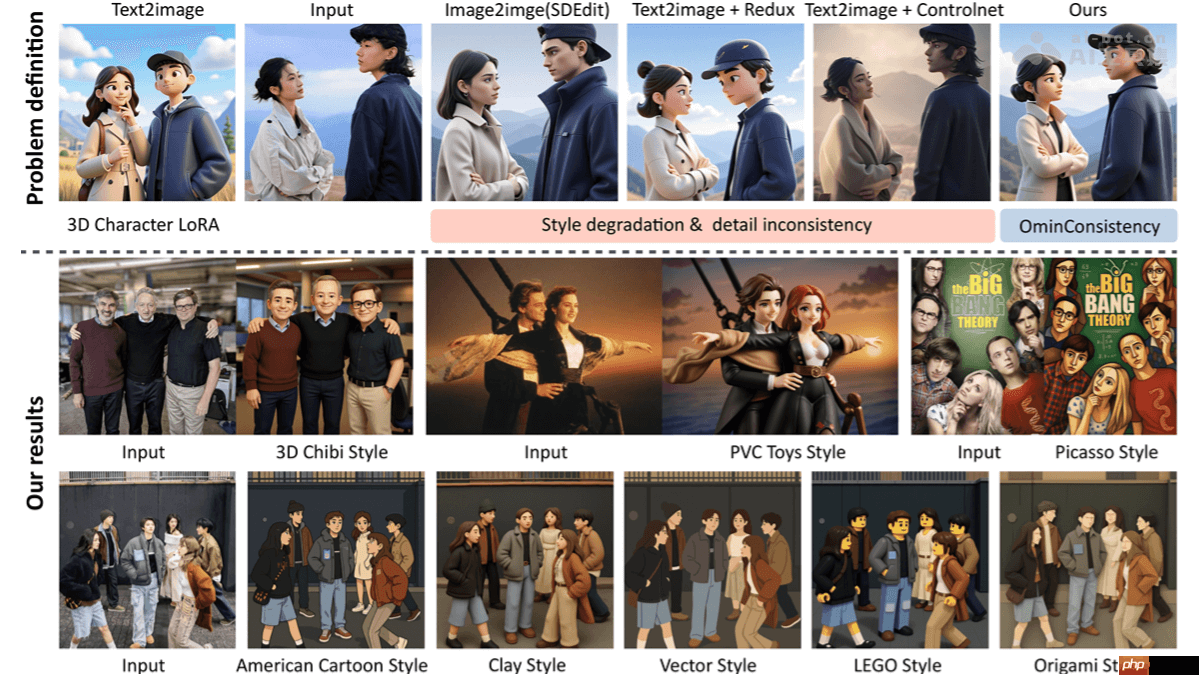

OmniConsistency is an image style transfer model developed by the National University of Singapore. It addresses the problem of keeping stylized images consistent with their source in complex scenes. The model is trained on large-scale paired stylized data with a two-stage strategy that decouples style learning from consistency learning, which preserves semantics, structure, and detail across a range of styles. OmniConsistency can be combined with any style-specific LoRA module for efficient, flexible stylization. In experiments it achieves performance comparable to GPT-4o while offering greater flexibility and generalization.

Key Features of OmniConsistency

- Style Consistency: Maintains style consistency across multiple styles without style degradation.

- Content Consistency: Preserves the original image's semantics and details during stylization, ensuring content integrity.

- Style Agnosticism: Seamlessly integrates with any style-specific LoRA (Low-Rank Adaptation) modules, supporting diverse stylization tasks.

- Flexibility: Offers flexible layout control without relying on traditional geometric constraints like edge maps or sketches.

Technical Underpinnings of OmniConsistency

- Two-Stage Training Strategy: Stage one focuses on independent training of multiple style-specific LoRA modules to capture unique details of each style. Stage two trains a consistency module on paired data, dynamically switching between different style LoRA modules to ensure focus on structural and semantic consistency while avoiding absorption of specific style features.

- Consistency LoRA Module: Introduces low-rank adaptation (LoRA) modules within conditional branches, adjusting only the conditional branch without interfering with the main network's stylization ability. Uses causal attention mechanisms to ensure conditional tokens interact internally while keeping the main branch (noise and text tokens) clean for causal modeling.

- Condition Token Mapping (CTM): Guides high-resolution generation using low-resolution condition images, ensuring spatial alignment through mapping mechanisms, reducing memory and computational overhead.

- Feature Reuse: Caches intermediate features of conditional tokens during diffusion processes to avoid redundant calculations, enhancing inference efficiency.

- Data-Driven Consistency Learning: Constructs a high-quality paired dataset containing 2,600 pairs across 22 different styles, learning semantic and structural consistency mappings via data-driven approaches.
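
The attention rule and the condition token mapping described above can be illustrated with a small sketch. This is not the project's code: the function names `build_attention_mask` and `map_condition_positions`, the token ordering (main-branch tokens first, condition tokens last), and the integer scale-factor mapping are all assumptions made for illustration. The sketch also assumes that main-branch tokens may still read the condition tokens, which the description above does not spell out.

```python
from typing import List, Tuple


def build_attention_mask(n_main: int, n_cond: int) -> List[List[bool]]:
    """Return mask[q][k], True when query token q may attend to key token k.

    Assumed token order: n_main main-branch tokens (noise and text),
    followed by n_cond condition tokens.
    """
    total = n_main + n_cond
    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        q_is_cond = q >= n_main
        for k in range(total):
            k_is_cond = k >= n_main
            if q_is_cond:
                # Condition tokens interact only among themselves, so the
                # condition branch never reads noise or text tokens.
                mask[q][k] = k_is_cond
            else:
                # Assumption: main-branch tokens may attend to every token,
                # including the condition tokens that guide generation.
                mask[q][k] = True
    return mask


def map_condition_positions(
    cond_h: int, cond_w: int, target_h: int, target_w: int
) -> List[Tuple[int, int]]:
    """Map each low-resolution condition token to a high-resolution grid cell.

    Hypothetical mapping: token (i, j) on the cond_h x cond_w grid is given
    the position (i * scale_y, j * scale_x) on the target grid, so a small
    condition image stays spatially aligned with a larger output.
    """
    if target_h % cond_h != 0 or target_w % cond_w != 0:
        raise ValueError("target grid must be an integer multiple of the condition grid")
    scale_y = target_h // cond_h
    scale_x = target_w // cond_w
    return [(i * scale_y, j * scale_x) for i in range(cond_h) for j in range(cond_w)]
```

For example, `build_attention_mask(2, 2)` blocks the two condition tokens from reading the two main-branch tokens, and `map_condition_positions(2, 2, 4, 4)` spreads a 2x2 condition grid over a 4x4 target grid. Because the condition tokens never depend on the noisy main-branch tokens under this mask, their intermediate features would be the same at every diffusion step, which is what would make caching them (the feature reuse described above) possible.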

Project Links for OmniConsistency

- GitHub Repository: https://www.php.cn/link/771f0d31d334435279ea1ea02b2c660c

- HuggingFace Model Library: https://www.php.cn/link/c93f6bc00902863602e25adcda3b1565

- arXiv Technical Paper: https://www.php.cn/link/314426bd564599865c676dbb6dc198c4

- Online Demo: https://www.php.cn/link/00ad4587c5c242e23703ec19d8495824

Practical Applications of OmniConsistency

- Art Creation: Applies various art styles such as anime, oil painting, and sketches to images, aiding artists in quickly generating stylized works.

- Content Generation: Rapidly generates images adhering to specific styles for content creation, enhancing diversity and appeal.

- Advertising Design: Creates visually appealing and brand-consistent images for advertisements and marketing materials.

- Game Development: Quickly produces stylized characters and environments for games, improving development efficiency.

- Virtual Reality (VR) and Augmented Reality (AR): Generates stylized virtual elements to enhance user experiences.

[Note: All images remain in their original format.]

福利游戏

相关文章

更多-

- 医保电子凭证怎么激活 医保电子凭证激活方法快速上手

- 时间:2025-05-31

-

- 孩子小离不开人?宝妈在家赚钱的3个选择!

- 时间:2025-05-31

-

- FLUX.1 Kontext— Black Forest Labs 推出的图像生成与编辑模型

- 时间:2025-05-31

-

- Anthropic年化收入达30亿美元,AI代码生成成主要增长动力

- 时间:2025-05-31

-

- 尊界S800上市 首发华为ADS 4.0 售70.8万至101.8万

- 时间:2025-05-31

-

- 曝大部分尊界S800用户选择顶配车型:一小时订单破千

- 时间:2025-05-31

-

- 豆包AI神操作!用发疯文学做热点图阅读量三天破万

- 时间:2025-05-31

-

- 5寸是多少厘米 5寸换算厘米的实用技巧

- 时间:2025-05-31

精选合集

更多大家都在玩

大家都在看

更多-

- 区块链合约平台:开启全球交易新纪元

- 时间:2025-05-31

-

- 魔兽世界索罗夫宝藏获取方法

- 时间:2025-05-31

-

- Venom币起源:解决交易痛点

- 时间:2025-05-31

-

- 《金铲铲之战》三冠冕无限爆金币攻略

- 时间:2025-05-31

-

- 魔兽世界博学者的罩衫怎么获取

- 时间:2025-05-31

-

- Smittix预售筹1430万,瞄准跨境支付

- 时间:2025-05-31

-

- 鸣潮2.2幽夜幻梦任务流程

- 时间:2025-05-31

-

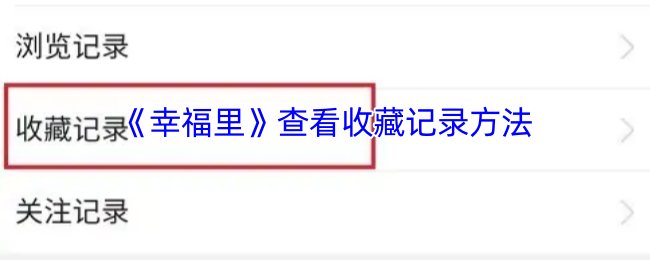

- 《幸福里》查看收藏记录方法

- 时间:2025-05-31