Point Cloud Processing: Classifying Point Clouds with Kd-Networks in Paddle 2.0

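Date: 2025-07-21

This article shows how to implement Kd-Networks in Paddle 2.0 to classify point clouds. Kd-Networks introduce a Kd-Tree structure that turns an unstructured point cloud into structured data, and this implementation approximates the KD operator with 1×1 convolutions. Using the ShapeNet dataset in .h5 format, the article walks through data processing, network definition, training and evaluation. After 20 epochs of training, accuracy reaches 0.978 on the training set and 0.9375 on the test set (the evaluation code scores only the first test batch).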


Network overview

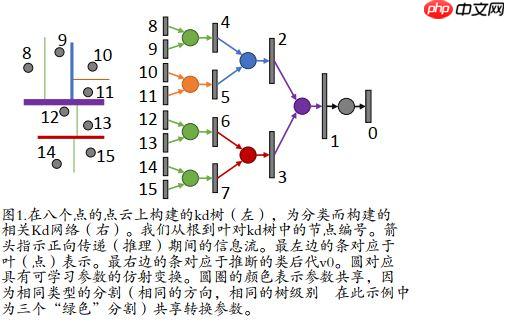

Kd-Networks was published at ICCV 2017. Its novelty is the use of a Kd-Tree structure to process point clouds.

Paper: Escape from Cells: Deep Kd-Networks for the Recognition of 3D Point Cloud Models

Results

Accuracy after twenty training epochs: 0.978 on the training set and 0.9375 on the test set.

Project description

① Dataset

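The dataset used here is ShapeNet, stored as .h5 files. Each .h5 file holds the following keys:

1. data: the xyz coordinates of all points in a sample.
2. label: the category the sample belongs to, such as airplane.
3. pid: the part type of every point in the sample. For a sample in the airplane category, for example, the points are labelled as wing, fuselage and so on.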

② About Kd-Networks

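1. The main innovation of Kd-Networks is the Kd-Tree structure. The Kd-Tree turns an unstructured point cloud into structured data, which provides the feature engineering needed to process unstructured point clouds.
2. This project approximates the KD operator with 1×1 convolutions, so the network it builds has a Kd-Tree-like layout. In other words, Kd-Networks replicate the Kd-Tree construction from the data processing step inside the network. For example, the index_select call replays the dimension along which each binary split was made when the Kd-Tree was built.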
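To make the "structured data" idea concrete, here is a minimal NumPy sketch of one way to build such a tree. It is not the project's `tools.build_KDTree`, whose internals are not shown in this article. The function name `build_kdtree_sketch` is hypothetical, and the splitting rule (median split along the axis with the largest coordinate range) is an assumption.

```python
import numpy as np

def build_kdtree_sketch(points, depth):
    """Hypothetical helper: split `points` (N, 3) with N == 2**depth.

    Returns the split axes recorded per level (root first) and the points
    reordered so that every subtree occupies a contiguous block.
    """
    assert points.shape[0] == 2 ** depth
    split_dims = [[] for _ in range(depth)]  # split_dims[0] is the root level

    def split(block, level):
        if level == depth:
            return block  # a single leaf point
        # Assumed rule: split along the axis with the largest range.
        axis = int(np.argmax(block.max(axis=0) - block.min(axis=0)))
        split_dims[level].append(axis)
        # Sort along that axis and cut at the median into two equal halves.
        block = block[np.argsort(block[:, axis], kind="stable")]
        half = block.shape[0] // 2
        left = split(block[:half], level + 1)
        right = split(block[half:], level + 1)
        return np.concatenate([left, right], axis=0)

    ordered = split(points, 0)
    return split_dims, ordered

rng = np.random.default_rng(0)
cloud = rng.random((8, 3)).astype("float32")
dims, leaves = build_kdtree_sketch(cloud, depth=3)
print([len(level) for level in dims])  # [1, 2, 4]
print(leaves.shape)                    # (8, 3)
```

Each level stores one split axis per node, so a tree over 1024 points has 10 levels with 1, 2, 4, ..., 512 entries. These per-node axes are the kind of information the network later consumes through index_select.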

Implementation

① Unpack the dataset and import the required libraries

```
!unzip data/data67117/shapenet_part_seg_hdf5_data.zip
!mv hdf5_data dataset
```

```python
import os
import random

import h5py
import numpy as np
import paddle
import paddle.nn as nn
import paddle.nn.functional as F

from tools.build_KDTree import build_KDTree
```

② Data processing

1. Build the lists of training, test and validation files

```python
train_list = ['ply_data_train0.h5', 'ply_data_train1.h5', 'ply_data_train2.h5',
              'ply_data_train3.h5', 'ply_data_train4.h5', 'ply_data_train5.h5']
test_list = ['ply_data_test0.h5', 'ply_data_test1.h5']
val_list = ['ply_data_val0.h5']
```

2. Load the data

Note: when loading the data, cKDTree from scipy.spatial can be used to build the kd-tree quickly.
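As a rough illustration of that note, the sketch below builds a `cKDTree` over random points and walks its nodes to collect the split dimension used at each depth. The random cloud and the helper name `split_dims_by_depth` are assumptions for illustration. The layout SciPy produces is not guaranteed to match what the project's `build_KDTree` returns.

```python
import numpy as np
from scipy.spatial import cKDTree

rng = np.random.default_rng(0)
points = rng.random((1024, 3))  # stand-in for one ShapeNet sample

# leafsize=1 keeps splitting until each leaf holds a single point.
tree = cKDTree(points, leafsize=1)

# Nearest-neighbour query: a stored point is its own nearest neighbour.
dist, idx = tree.query(points[0])

def split_dims_by_depth(node, depth=0, out=None):
    """Hypothetical helper: collect split dimensions per depth, root first."""
    if out is None:
        out = {}
    if node.split_dim == -1:  # leaf nodes report split_dim == -1
        return out
    out.setdefault(depth, []).append(node.split_dim)
    split_dims_by_depth(node.lesser, depth + 1, out)
    split_dims_by_depth(node.greater, depth + 1, out)
    return out

levels = split_dims_by_depth(tree.tree)
print(levels[0])             # split dimension chosen at the root
print(len(tree.tree.indices))  # 1024
```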

```python
def pointDataLoader(mode='train'):
    path = './dataset/'
    BATCHSIZE = 64
    MAX_POINT = 1024
    # Depth of the kd-tree: log2(1024) = 10 levels.
    # np.log2 avoids the rounding risk of log(x) / log(2) followed by truncation.
    LEVELS = int(np.log2(MAX_POINT))

    # Pick the file list that matches the requested split.
    if mode == 'train':
        file_lists = train_list
    elif mode == 'test':
        file_lists = test_list
    else:
        file_lists = val_list

    datas = []
    labels = []
    for file_name in file_lists:
        with h5py.File(os.path.join(path, file_name), 'r') as f:
            # Keep only the first MAX_POINT points of every sample.
            datas.extend(f['data'][:, :MAX_POINT, :])
            labels.extend(f['label'])
    datas = np.array(datas)

    # Build one kd-tree per sample; keep the split dimensions and the leaf points.
    split_dims_v = []
    points_v = []
    for i in range(len(datas)):
        split_dim, tree = build_KDTree(datas[i], depth=LEVELS)
        split_dim_v = [np.array(item).astype(np.int64) for item in split_dim]
        split_dims_v.append(split_dim_v)
        points_v.append(tree[-1].transpose(0, 2, 1))
    split_dims_v = np.array(split_dims_v)
    points_v = np.array(points_v)
    labels = np.array(labels)
    print('==========load over==========')

    index_list = list(range(len(datas)))

    def pointDataGenerator():
        # Shuffle only the training set.
        if mode == 'train':
            random.shuffle(index_list)
        split_dims_v_list = []
        points_v_list = []
        labels_list = []
        for i in index_list:
            split_dims_v_list.append(split_dims_v[i])
            points_v_list.append(points_v[i].astype('float32'))
            labels_list.append(labels[i].astype('int64'))
            if len(labels_list) == BATCHSIZE:
                yield np.array(split_dims_v_list), np.array(points_v_list), np.array(labels_list)
                split_dims_v_list = []
                points_v_list = []
                labels_list = []
        # Yield the remaining samples as a smaller final batch.
        if len(labels_list) > 0:
            yield np.array(split_dims_v_list), np.array(points_v_list), np.array(labels_list)

    return pointDataGenerator
```
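The batching behaviour of the generator can be seen in isolation with a small sketch. The helper `batch_indices` and the sample count of 150 are hypothetical. The sketch only shows that full batches are yielded first and the leftover samples form a shorter last batch.

```python
import numpy as np

def batch_indices(n_samples, batch_size, shuffle, seed=0):
    """Hypothetical helper: yield index arrays, one per batch."""
    order = np.arange(n_samples)
    if shuffle:
        np.random.default_rng(seed).shuffle(order)
    for start in range(0, n_samples, batch_size):
        # The last slice is shorter when n_samples is not a multiple of batch_size.
        yield order[start:start + batch_size]

batches = list(batch_indices(150, 64, shuffle=True))
sizes = [len(b) for b in batches]
print(sizes)  # [64, 64, 22]
```

Because the last batch can be smaller than 64, code that consumes the loader should read the batch size from the data rather than hard-code it, which is what the training loop does with `split_dims_v.shape[0]`.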

③ Define the network

```python
class KDNet(nn.Layer):
    def __init__(self, k=16):
        super().__init__()
        # Each 1x1 convolution emits 3 * featdim channels:
        # one group of featdim channels per candidate split axis (x, y, z).
        self.conv1 = nn.Conv1D(3, 32 * 3, 1, 1)
        self.conv2 = nn.Conv1D(32, 64 * 3, 1, 1)
        self.conv3 = nn.Conv1D(64, 64 * 3, 1, 1)
        self.conv4 = nn.Conv1D(64, 128 * 3, 1, 1)
        self.conv5 = nn.Conv1D(128, 128 * 3, 1, 1)
        self.conv6 = nn.Conv1D(128, 256 * 3, 1, 1)
        self.conv7 = nn.Conv1D(256, 256 * 3, 1, 1)
        self.conv8 = nn.Conv1D(256, 512 * 3, 1, 1)
        self.conv9 = nn.Conv1D(512, 512 * 3, 1, 1)
        self.conv10 = nn.Conv1D(512, 128 * 3, 1, 1)
        self.fc = nn.Linear(128, k)

    def forward(self, x, split_dims_v):
        def kdconv(x, dim, featdim, select, conv):
            x = F.relu(conv(x))
            x = paddle.reshape(x, (-1, featdim, 3, dim))
            x = paddle.reshape(x, (-1, featdim, 3 * dim))
            # Select features according to the split dimension of each node.
            select = paddle.to_tensor(select) + (paddle.arange(0, dim) * 3)
            x = paddle.index_select(x, axis=2, index=select)
            # Merge sibling nodes pairwise with a max, halving the node count.
            x = paddle.reshape(x, (-1, featdim, dim // 2, 2))
            x = paddle.max(x, axis=-1)
            return x

        # Ten levels reduce 1024 leaf nodes to a single root feature.
        x = kdconv(x, 1024, 32, split_dims_v[0], self.conv1)
        x = kdconv(x, 512, 64, split_dims_v[1], self.conv2)
        x = kdconv(x, 256, 64, split_dims_v[2], self.conv3)
        x = kdconv(x, 128, 128, split_dims_v[3], self.conv4)
        x = kdconv(x, 64, 128, split_dims_v[4], self.conv5)
        x = kdconv(x, 32, 256, split_dims_v[5], self.conv6)
        x = kdconv(x, 16, 256, split_dims_v[6], self.conv7)
        x = kdconv(x, 8, 512, split_dims_v[7], self.conv8)
        x = kdconv(x, 4, 512, split_dims_v[8], self.conv9)
        x = kdconv(x, 2, 128, split_dims_v[9], self.conv10)
        x = paddle.reshape(x, (-1, 128))
        x = F.log_softmax(self.fc(x), axis=-1)
        return x
```
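The shape bookkeeping inside `kdconv` is easier to follow outside the framework. The sketch below repeats the reshape, index selection and pairwise max with NumPy standing in for Paddle, starting from what the first 1×1 convolution would output. The function name `select_and_pool` is hypothetical, and the inputs (including the split dimensions) are random placeholders rather than values taken from a real kd-tree.

```python
import numpy as np

def select_and_pool(x, split_dims, featdim, dim):
    """Hypothetical NumPy mirror of the tensor steps that follow the conv in kdconv."""
    # (batch, 3 * featdim, dim) -> (batch, featdim, 3 * dim)
    x = x.reshape(-1, featdim, 3, dim).reshape(-1, featdim, 3 * dim)
    # One index per node, offset by the node's split dimension.
    sel = split_dims + np.arange(dim) * 3
    x = np.take(x, sel, axis=2)  # (batch, featdim, dim)
    # Pair up adjacent nodes and keep the element-wise maximum of each pair.
    x = x.reshape(-1, featdim, dim // 2, 2)
    return x.max(axis=-1)        # (batch, featdim, dim // 2)

rng = np.random.default_rng(0)
conv_out = rng.standard_normal((1, 32 * 3, 1024))  # placeholder for conv1 output
split_dims = rng.integers(0, 3, size=1024)         # placeholder split axes
pooled = select_and_pool(conv_out, split_dims, featdim=32, dim=1024)
print(pooled.shape)  # (1, 32, 512)
```

Applying this step ten times halves the node count from 1024 down to 1, which is why the network ends with a reshape to `(-1, 128)` before the fully connected layer.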

⑤ Training

1. Create the training data loader

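Note: preprocessing the training data is slow, so the training data loader is created first (creating it also runs the preprocessing). Training can then reuse the loader directly and appears much faster. This does not actually save any time. The loader is created in its own cell only to make debugging easier and to let readers experiment.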

```python
train_loader = pointDataLoader(mode='train')
```

```
==========load over==========
```

2. Start training

```python
def train():
    model = KDNet()
    model.train()
    optim = paddle.optimizer.Adam(parameters=model.parameters(), weight_decay=0.001)
    epoch_num = 40
    # Make sure the checkpoint directory exists before saving.
    os.makedirs('./model', exist_ok=True)
    for epoch in range(epoch_num):
        for batch_id, data in enumerate(train_loader()):
            split_dims_v, points_v, labels = data

            inputs = paddle.to_tensor(points_v)
            labels = paddle.to_tensor(labels)

            # Every sample has its own kd-tree, so run the model sample by sample.
            predict = []
            for i in range(split_dims_v.shape[0]):
                predict.extend(model(inputs[i], split_dims_v[i]))
            predicts = paddle.stack(predict)

            # The model outputs log-probabilities, so use the NLL loss.
            loss = F.nll_loss(predicts, labels)
            acc = paddle.metric.accuracy(predicts, labels)

            if batch_id % 10 == 0:
                print("epoch: {}, batch_id: {}, loss is: {}, accuracy is: {}".format(
                    epoch, batch_id, loss.numpy(), acc.numpy()))

            loss.backward()
            optim.step()
            optim.clear_grad()

        # Save a checkpoint every second epoch.
        if epoch % 2 == 0:
            paddle.save(model.state_dict(), './model/KDNet.pdparams')
            paddle.save(optim.state_dict(), './model/KDNet.pdopt')


if __name__ == '__main__':
    train()
```
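The network ends with log_softmax and the training loop uses nll_loss. Together these amount to the usual softmax cross-entropy, which the following NumPy sketch checks numerically. The helper functions are written here for illustration and are not Paddle APIs. The logits and labels are random placeholders.

```python
import numpy as np

def log_softmax(logits):
    # Subtract the row maximum first for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def nll_loss(log_probs, labels):
    # Mean negative log-probability assigned to the true class.
    return -log_probs[np.arange(len(labels)), labels].mean()

def cross_entropy(logits, labels):
    # Direct softmax cross-entropy, written without the log_softmax shortcut.
    exp = np.exp(logits)
    probs = exp / exp.sum(axis=1, keepdims=True)
    return -np.log(probs[np.arange(len(labels)), labels]).mean()

rng = np.random.default_rng(0)
logits = rng.standard_normal((4, 16))  # 4 samples, 16 classes
labels = np.array([0, 3, 7, 15])

loss_a = nll_loss(log_softmax(logits), labels)
loss_b = cross_entropy(logits, labels)
print(np.isclose(loss_a, loss_b))  # True
```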

⑥ Evaluation

```python
def evaluation():
    test_loader = pointDataLoader(mode='test')
    model = KDNet()
    model_state_dict = paddle.load('./model/KDNet.pdparams')
    model.load_dict(model_state_dict)
    model.eval()
    for batch_id, data in enumerate(test_loader()):
        split_dims_v, points_v, labels = data

        inputs = paddle.to_tensor(points_v)
        labels = paddle.to_tensor(labels)

        # Run the model sample by sample, as in training.
        predict = []
        for i in range(split_dims_v.shape[0]):
            predict.extend(model(inputs[i], split_dims_v[i]))
        predicts = paddle.stack(predict)

        acc = paddle.metric.accuracy(predicts, labels)
        print("eval accuracy is: {}".format(acc.numpy()))
        # Only the first test batch is evaluated.
        break


if __name__ == '__main__':
    evaluation()
```

```
==========load over==========
eval accuracy is: [0.9375]
```
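Because the evaluation loop stops after its first batch, the reported 0.9375 is consistent with 60 correct predictions out of a batch of 64. To score the whole test set, per-batch accuracies would need to be weighted by batch size. The sketch below shows that arithmetic only: the helper `overall_accuracy` is hypothetical, and the second and third batch results are made-up numbers, not measurements from this project.

```python
def overall_accuracy(batch_results):
    """Hypothetical helper: combine (accuracy, batch_size) pairs into one score."""
    correct = 0.0
    total = 0
    for acc, size in batch_results:
        correct += acc * size  # number of correct predictions in this batch
        total += size
    return correct / total

first_batch = 60 / 64
print(first_batch)  # 0.9375

# Made-up per-batch results, used only to show the weighting.
results = [(0.9375, 64), (0.875, 64), (1.0, 16)]
combined = overall_accuracy(results)
print(combined)
```

A plain average of the three accuracies would overweight the short final batch, which is why the batch sizes are carried along.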

Source: https://www.php.cn/faq/1419403.html
