[Image Denoising] 7th Paper Reproduction Challenge: SwinIR

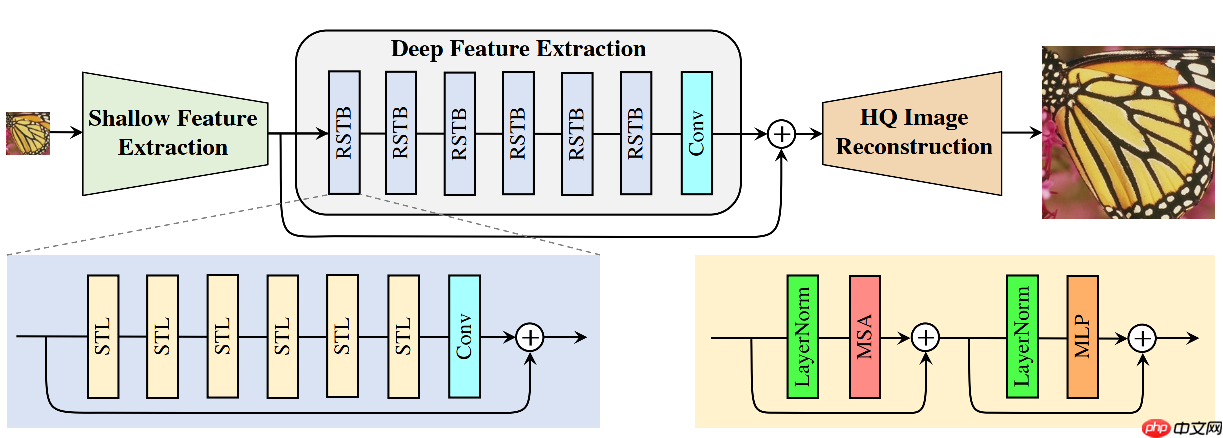

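Date: 2025-07-22

The authors apply the Swin Transformer (Swin-T) architecture to low-level vision tasks: image super-resolution, image denoising, and compression artifact reduction. The SwinIR network has three parts: a shallow feature extraction module, a deep feature extraction module, and a reconstruction module. The reconstruction module uses a different structure for each task. Shallow feature extraction is a single 3×3 convolutional layer. Deep feature extraction consists of K residual Swin Transformer blocks (RSTB) followed by a convolutional layer, with a residual connection around them.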
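The denoising data flow described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the reproduction's Paddle code: the Swin Transformer layers inside each RSTB are replaced by identity (so each placeholder RSTB is only a 3×3 conv plus a residual), input normalization is omitted, and the helper names, channel width 16 and K=4 are hypothetical. The layer names `conv_first`, `conv_after_body` and `conv_last` mirror those in the official SwinIR code; for denoising, the reconstruction is a single conv whose output is added back to the noisy input.

```python
import numpy as np


def conv3x3(x, weight, bias):
    """3x3 convolution, stride 1, zero padding 1 (output keeps H and W).

    x: (C_in, H, W); weight: (C_out, C_in, 3, 3); bias: (C_out,)
    """
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w))
    for i in range(3):
        for j in range(3):
            # Add the contribution of kernel tap (i, j), summed over input channels.
            out += np.einsum("oc,chw->ohw", weight[:, :, i, j], xp[:, i:i + h, j:j + w])
    return out + bias[:, None, None]


def make_conv(rng, c_out, c_in, scale=0.02):
    return rng.normal(0.0, scale, (c_out, c_in, 3, 3)), np.zeros(c_out)


def init_params(in_ch=3, dim=16, num_rstb=4, seed=0):
    rng = np.random.default_rng(seed)
    return {
        "conv_first": make_conv(rng, dim, in_ch),       # shallow feature extraction
        "rstb_convs": [make_conv(rng, dim, dim) for _ in range(num_rstb)],
        "conv_after_body": make_conv(rng, dim, dim),    # conv closing the deep module
        "conv_last": make_conv(rng, in_ch, dim),        # reconstruction for denoising
    }


def rstb_placeholder(x, conv):
    # A real RSTB is: several Swin Transformer layers -> 3x3 conv -> + x.
    # HYPOTHETICAL simplification: the Swin Transformer layers are identity here.
    return x + conv3x3(x, *conv)


def swinir_denoise_forward(img, params):
    shallow = conv3x3(img, *params["conv_first"])
    deep = shallow
    for conv in params["rstb_convs"]:
        deep = rstb_placeholder(deep, conv)
    # Residual connection around the whole deep feature extraction module.
    deep = conv3x3(deep, *params["conv_after_body"]) + shallow
    # Global residual: the network predicts a correction added to the noisy input.
    return img + conv3x3(deep, *params["conv_last"])


params = init_params()
noisy = np.random.default_rng(1).random((3, 16, 16))
print(swinir_denoise_forward(noisy, params).shape)  # (3, 16, 16)
```

Because of the global residual, zeroing `conv_last` makes the sketch return its input unchanged, which is a quick sanity check on the wiring.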

Paper reproduction: low-level algorithm SwinIR (denoising)

SwinIR: Image Restoration Using Swin Transformer (a strong baseline model for image restoration based on the Swin Transformer)

Official source code: https://github.com/JingyunLiang/SwinIR

Reproduction repository: https://github.com/sldyns/SwinIR_paddle

Script task: https://aistudio.baidu.com/aistudio/clusterprojectdetail/3792518

PaddleGAN version: https://github.com/PaddlePaddle/PaddleGAN/blob/develop/docs/zh_CN/tutorials/swinir.md

1. Introduction

2. Reproduced accuracy

Tested on the CBSD68 test set, the model meets the minimum acceptance standard of 34.32 (PSNR, dB).

Note: the original code trains for 1,600,000 iterations on 8 GPUs; our 4-GPU run reached only 426,000 iterations before it was stopped by the time limit.
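To make the acceptance metric concrete, here is a minimal sketch of how a denoising test image and its PSNR can be computed. Assumptions: the noise level σ=15 is inferred from the pretrained model's file name (`noise15`), images are floats in [0, 1] before noise is added, and PSNR is computed on 8-bit RGB values without border cropping; the official test script may differ in such details. `add_gaussian_noise`, `to_uint8` and `psnr` are hypothetical helper names.

```python
import numpy as np


def add_gaussian_noise(img, sigma, seed=0):
    """Add zero-mean white Gaussian noise; img is float in [0, 1], sigma on the 0-255 scale."""
    rng = np.random.default_rng(seed)
    return img + rng.normal(0.0, sigma / 255.0, img.shape)


def to_uint8(x):
    """Map a float image in [0, 1] to uint8 with rounding and clipping."""
    return np.clip(np.round(x * 255.0), 0, 255).astype(np.uint8)


def psnr(ref, est, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two images on the same scale."""
    mse = np.mean((ref.astype(np.float64) - est.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(max_val ** 2 / mse)


clean = np.random.default_rng(1).random((64, 64, 3))
noisy = add_gaussian_noise(clean, sigma=15)
print(f"PSNR of the noisy input: {psnr(to_uint8(clean), to_uint8(noisy)):.2f} dB")
```

A denoiser is then scored by `psnr(to_uint8(clean), to_uint8(denoised))`, averaged over the 68 test images.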

3. Datasets, pretrained models, and file structure

3.1 Training data

DIV2K (800 training images) + Flickr2K (2650 images) + BSD500 (400 training & testing images) + WED (4744 images)

The preprocessed data is available on AI Studio.

Put the training data under data/trainsets/trainH.
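The clean images in trainH are turned into (noisy, clean) training pairs. The sketch below assumes a KAIR-style pipeline, which the official SwinIR training code builds on: random crop, one of eight flip/rotation variants, then additive Gaussian noise. The patch size 128 and σ=15 are inferred from the model name (`s128`, `noise15`); the reproduction's actual data loader may differ, and `augment` and `make_training_pair` are hypothetical names.

```python
import numpy as np


def augment(img, mode):
    """Return one of 8 flip/rotation variants (mode in 0..7) of an (H, W, C) image."""
    if mode >= 4:
        img = np.flipud(img)
    return np.rot90(img, k=mode % 4)  # rotates the H and W axes


def make_training_pair(img_h, patch_size=128, sigma=15, rng=None):
    """Return (noisy, clean) float32 patches cut from one clean image in [0, 1]."""
    if rng is None:
        rng = np.random.default_rng()
    h, w, _ = img_h.shape
    if h < patch_size or w < patch_size:
        raise ValueError("image is smaller than the patch size")
    top = rng.integers(0, h - patch_size + 1)
    left = rng.integers(0, w - patch_size + 1)
    patch = img_h[top:top + patch_size, left:left + patch_size]
    patch = augment(patch, int(rng.integers(0, 8))).astype(np.float32)
    # Fresh noise for every sample, so the network never sees the same noisy patch twice.
    noise = rng.normal(0.0, sigma / 255.0, patch.shape).astype(np.float32)
    return patch + noise, patch
```

Generating noise on the fly rather than storing noisy copies keeps the dataset on disk limited to clean images.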

3.2 Test data

The test data is CBSD68, available on AI Studio.

Extract it to data/testsets/CBSD68.

```
# Extract the datasets
!cd data && unzip -oq -d testsets/ data147756/CBSD68.zip
!cd data && unzip -oq -d trainsets/ data149405/trainH.zip
```

```
# Create symbolic links
!cd work && ln -s ../data/trainsets trainsets && ln -s ../data/testsets testsets
```

3.3 Pretrained models

These are located in the work/pretrained_models folder:

- The official pretrained model, converted to Paddle format, named 005_colorDN_DFWB_s128w8_SwinIR-M_noise15.pdparams.

- The reproduced model, named SwinIR_paddle.pdparams.
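The article does not describe how the official PyTorch weights were converted. A common approach is sketched below, assuming the state dict has already been loaded as a mapping from parameter names to NumPy arrays: PyTorch `nn.Linear` stores its weight as (out_features, in_features) while `paddle.nn.Linear` uses (in_features, out_features), so 2-D `.weight` tensors are transposed; conv kernels, biases and LayerNorm parameters keep their layout. `torch_to_paddle_state_dict` is a hypothetical name, and the reproduction's own script may handle more cases.

```python
import numpy as np


def torch_to_paddle_state_dict(torch_sd):
    """Convert a PyTorch-style state dict (name -> np.ndarray) to Paddle layout.

    Assumption: every 2-D tensor whose name ends in '.weight' is a Linear weight.
    That holds for SwinIR (qkv, proj, fc1, fc2), whose 2-D relative position
    bias tables are not named '.weight', but would be wrong for e.g. embeddings.
    """
    paddle_sd = {}
    for name, value in torch_sd.items():
        if name.endswith(".weight") and value.ndim == 2:
            paddle_sd[name] = np.ascontiguousarray(value.T)
        else:
            paddle_sd[name] = value
    return paddle_sd


example = {
    "layers.0.residual_group.blocks.0.attn.qkv.weight": np.zeros((48, 16)),
    "layers.0.residual_group.blocks.0.attn.qkv.bias": np.zeros(48),
    "conv_first.weight": np.zeros((16, 3, 3, 3)),
}
for name, value in torch_to_paddle_state_dict(example).items():
    print(name, value.shape)
```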

3.4 File structure

```
SwinIR_Paddle
|-- data                      # data-related files
|-- models                    # model-related files
|-- options                   # training configuration files
|-- trainsets
    |-- trainH                # training data
|-- testsets
    |-- CBSD68                # test data
|-- test_tipc                 # TIPC: Linux GPU/CPU basic training and inference tests
|-- pretrained_models         # pretrained models
|-- utils                     # utility code
|-- config.py                 # configuration file
|-- generate_patches_SIDD.py  # generates data patches
|-- infer.py                  # model inference code
|-- LICENSE                   # LICENSE file
|-- main_test_swinir.py       # model testing code
|-- main_train_psnr.py        # model training code
|-- main_train_tipc.py        # TIPC training code
|-- README.md                 # README.md file
|-- train.log                 # training log
```

4. Dependencies

PaddlePaddle >= 2.3.2

scikit-image == 0.19.3

```
!pip install scikit-image
```


5. Quick start

The configuration files are in work/options, where you can change parameters such as the learning rate and batch_size.
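Editing the JSON file by hand is usually enough, but when sweeping parameters it can help to do it programmatically. The sketch below assumes the options follow KAIR's layout (which the official SwinIR training code uses): nested JSON that may contain `//` line comments, with keys such as `datasets.train.dataloader_batch_size` and `train.G_optimizer_lr`. Those key names and both helper functions are assumptions; check the actual files in work/options before relying on them.

```python
import json


def load_options_with_comments(text):
    """Parse JSON that may contain '//' line comments.

    The stripping is naive: it would also cut '//' inside strings such as URLs.
    """
    lines = [line.split("//")[0] for line in text.splitlines()]
    return json.loads("\n".join(lines))


def set_option(opt, dotted_key, value):
    """Set a nested option, e.g. set_option(opt, "train.G_optimizer_lr", 1e-4)."""
    *parents, leaf = dotted_key.split(".")
    node = opt
    for key in parents:
        node = node[key]
    node[leaf] = value


# Hypothetical excerpt of an options file, not copied from the repository.
sample = """{
  "datasets": {"train": {"dataloader_batch_size": 8}},  // batch size
  "train": {"G_optimizer_lr": 2e-4}                     // learning rate
}"""
opt = load_options_with_comments(sample)
set_option(opt, "train.G_optimizer_lr", 1e-4)
set_option(opt, "datasets.train.dataloader_batch_size", 4)
print(json.dumps(opt, indent=2))
```

Writing the result to a new file (for example with `json.dump`) and passing that path to `--opt` keeps the original configuration untouched.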

5.1 Model training

For a better experience, we recommend single-machine multi-GPU training, for example by forking and running the script task: https://aistudio.baidu.com/aistudio/clusterprojectdetail/3792518

```
# Single machine, single GPU
!cd work && python main_train_psnr.py --opt options/train_swinir_multi_card_32.json
```