An Autonomous-Driving Road Segmentation Model Based on PaddleSeg and BiSeNet

Date: 2025-07-23

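Autonomous-driving road segmentation model based on PaddleSeg

Lane-line segmentation is an active problem in image segmentation and has important applications in autonomous driving.

In this project we train an autonomous-driving road segmentation model with PaddlePaddle's PaddleSeg toolkit, as the assignment requires. The model uses the real-time semantic segmentation network BiSeNet and is trained on several preprocessed datasets. After training, we evaluate and visualize the model from multiple angles, then save and export it for use in the next stage of the research practicum.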

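Open main.ipynb to enter the project.

Be sure to open the project with version 2022_0723_Release3.

Be sure to run the project in a GPU environment.

The complete training workflow follows. Running the cells in order covers dataset preparation, model training, evaluation and visualization, and model saving and export.

All code can be run directly in order. Because of a technical issue in AI Studio itself, some code is underlined with red squiggles; it still runs normally.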

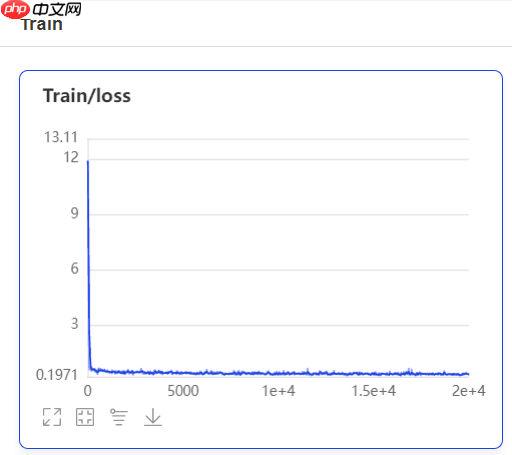

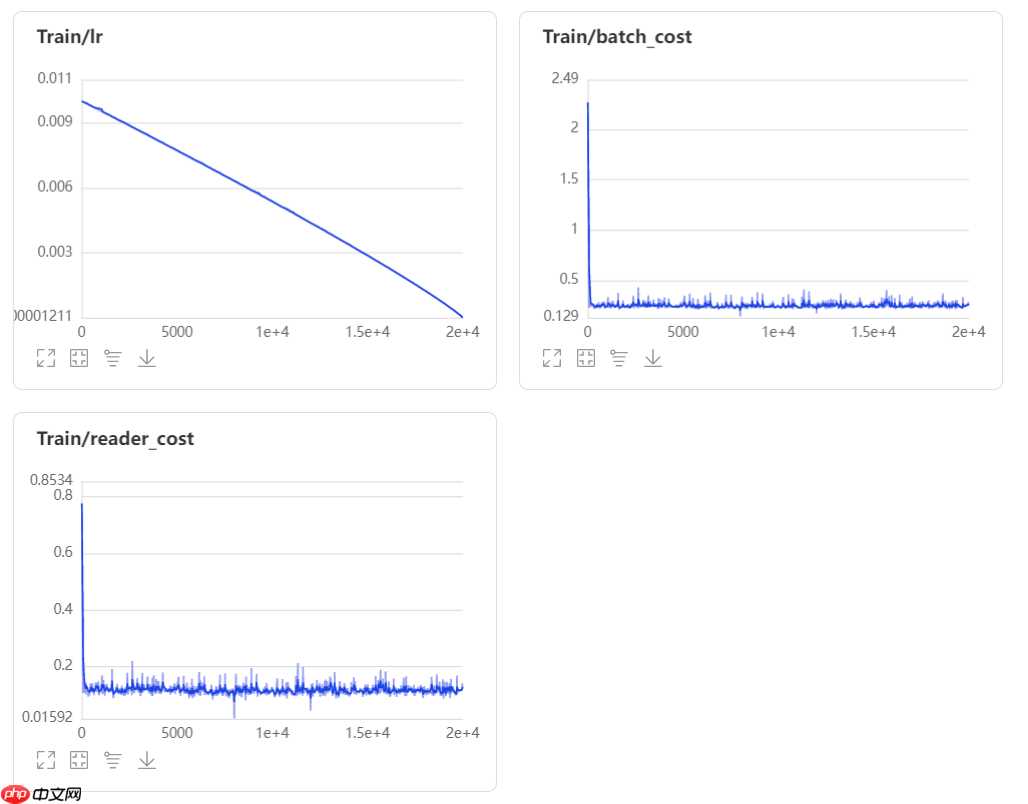

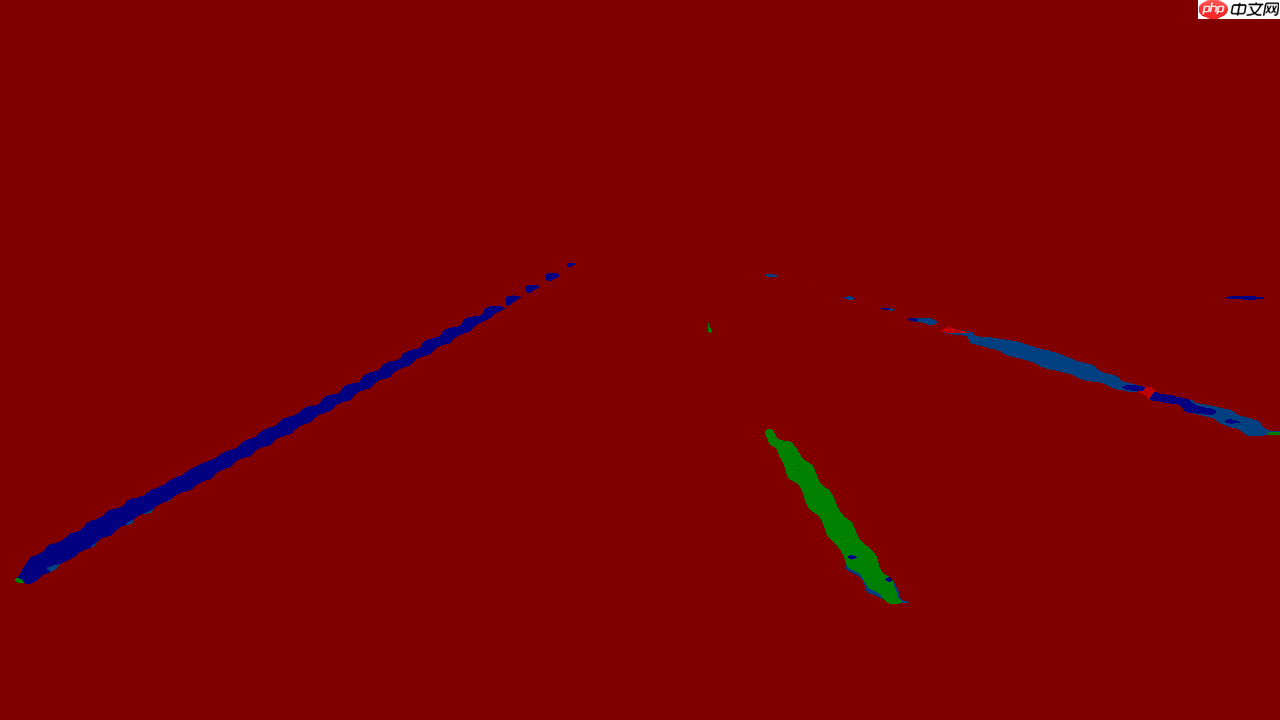

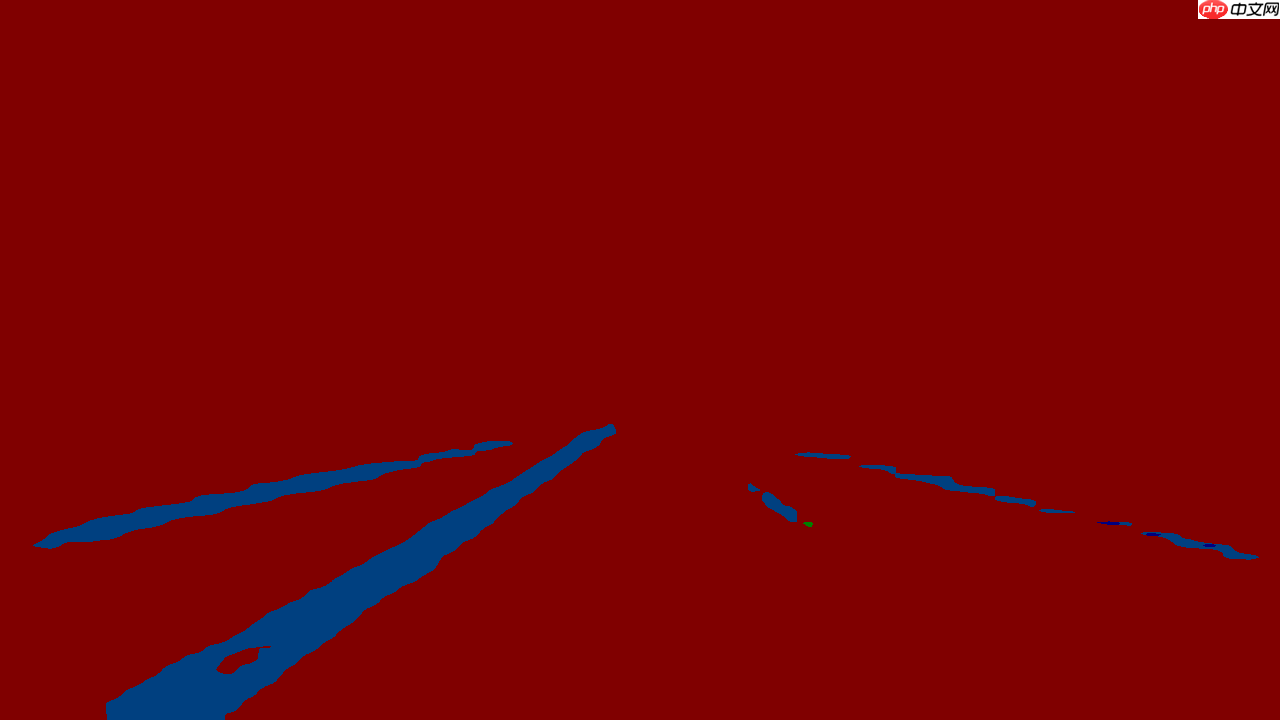

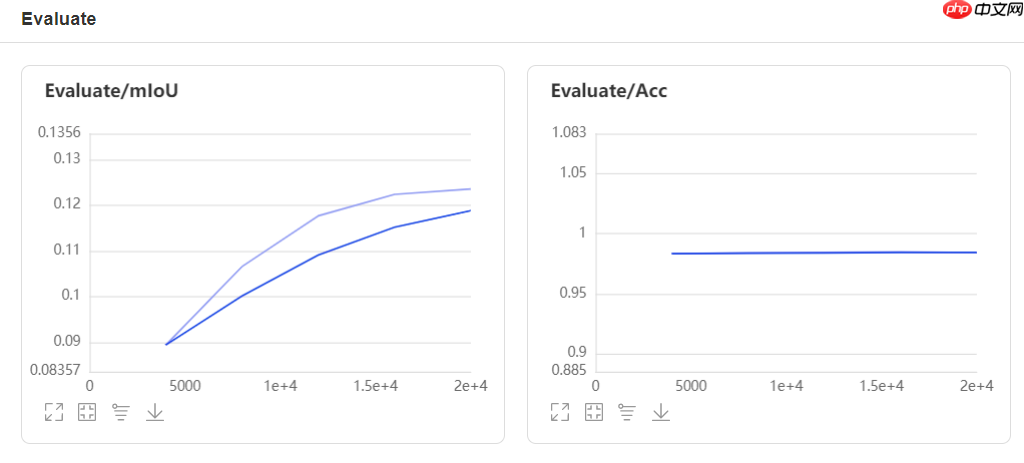

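In the visualization section we provide the charts from the last training run before release. To view charts for a model you trained yourself, click the Visualization button in the left sidebar and follow the prompts to select the logdir and your model file; this generates the charts for that training and evaluation run.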

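Shandong University, Data Science and Artificial Intelligence Experimental Class

Team members: 陈其轩, 王启帆, 何金原, 高梓又

References:

PaddleSeg in Practice: Portrait Segmentation (latest version) https://aistudio.baidu.com/aistudio/projectdetail/2189481

BiSeNet, a Classic Lightweight Real-Time Semantic Segmentation Network, and Its Successor BiSeNet v2 https://zhuanlan.zhihu.com/p/141692672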

BiSeNet: Bilateral Segmentation Network for Real-time Semantic Segmentation

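1. PaddleSeg installation and environment setup

PaddleSeg is an end-to-end image segmentation toolkit built on PaddlePaddle. It covers a large number of high-quality segmentation models in different directions, including high-accuracy and lightweight models. Its modular design offers two ways of working, configuration-driven and API calls, which help developers complete the full image segmentation workflow from training to deployment more easily.

In [1]

    # Download PaddleSeg with git
    # GitHub address
    #! git clone https://github.com/PaddlePaddle/PaddleSeg
    # Gitee address (recommended)
    ! git clone https://gitee.com/paddlepaddle/PaddleSeg.git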

    Cloning into 'PaddleSeg'...
    remote: Enumerating objects: 18055, done.
    remote: Counting objects: 100% (3018/3018), done.
    remote: Compressing objects: 100% (1534/1534), done.
    remote: Total 18055 (delta 1698), reused 2505 (delta 1435), pack-reused 15037
    Receiving objects: 100% (18055/18055), 341.84 MiB | 11.88 MiB/s, done.
    Resolving deltas: 100% (11562/11562), done.
    Checking connectivity... done.

In [2]

    # Install the dependencies
    !pip install -r PaddleSeg/requirements.txt
    # Verification run; can be skipped to save time
    #! python train.py --config configs/quick_start/bisenet_optic_disc_512x512_1k.yml

    Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple
    Requirement already satisfied: pyyaml>=5.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 1)) (5.1.2)
    Requirement already satisfied: visualdl>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 2)) (2.3.0)
    Requirement already satisfied: opencv-python in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 3)) (4.1.1.26)
    Requirement already satisfied: tqdm in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 4)) (4.27.0)
    Requirement already satisfied: filelock in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 5)) (3.0.12)
    Requirement already satisfied: scipy in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 6)) (1.6.3)
    Requirement already satisfied: prettytable in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 7)) (0.7.2)
    Requirement already satisfied: sklearn in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from -r PaddleSeg/requirements.txt (line 8)) (0.0)
    Requirement already satisfied: numpy in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.19.5)
    Requirement already satisfied: matplotlib in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2.2.3)
    Requirement already satisfied: flask>=1.1.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.1.1)
    Requirement already satisfied: Flask-Babel>=1.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.0.0)
    Requirement already satisfied: protobuf>=3.11.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (3.20.0)
    Requirement already satisfied: pandas in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.1.5)
    Requirement already satisfied: requests in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2.24.0)
    Requirement already satisfied: six>=1.14.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.16.0)
    Requirement already satisfied: Pillow>=7.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (8.2.0)
    Requirement already satisfied: bce-python-sdk in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (0.8.53)
    Requirement already satisfied: scikit-learn in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from sklearn->-r PaddleSeg/requirements.txt (line 8)) (0.24.2)
    Requirement already satisfied: click>=5.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (7.0)
    Requirement already satisfied: Jinja2>=2.10.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (3.0.0)
    Requirement already satisfied: itsdangerous>=0.24 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.1.0)
    Requirement already satisfied: Werkzeug>=0.15 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (0.16.0)
    Requirement already satisfied: Babel>=2.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2.8.0)
    Requirement already satisfied: pytz in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2019.3)
    Requirement already satisfied: pycryptodome>=3.8.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (3.9.9)
    Requirement already satisfied: future>=0.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (0.18.0)
    Requirement already satisfied: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (3.0.9)
    Requirement already satisfied: cycler>=0.10 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (0.10.0)
    Requirement already satisfied: python-dateutil>=2.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2.8.2)
    Requirement already satisfied: kiwisolver>=1.0.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.1.0)
    Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (1.25.6)
    Requirement already satisfied: idna<3,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2.8)
    Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2019.9.11)
    Requirement already satisfied: chardet<4,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (3.0.4)
    Requirement already satisfied: joblib>=0.11 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn->sklearn->-r PaddleSeg/requirements.txt (line 8)) (0.14.1)
    Requirement already satisfied: threadpoolctl>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn->sklearn->-r PaddleSeg/requirements.txt (line 8)) (2.1.0)
    Requirement already satisfied: MarkupSafe>=2.0.0rc2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Jinja2>=2.10.1->flask>=1.1.1->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (2.0.1)
    Requirement already satisfied: setuptools in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from kiwisolver>=1.0.1->matplotlib->visualdl>=2.0.0->-r PaddleSeg/requirements.txt (line 2)) (56.2.0)

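2. Dataset preparation

2.1 Dataset selection

To meet the assignment's requirement to use as many datasets as possible, this project uses four datasets. Because AI Studio limits how many datasets a project can mount (at most two), we processed three of them into a single large dataset collection and mounted that collection. The datasets used are:

Lane Line Detection, Preliminary Round https://aistudio.baidu.com/aistudio/datasetdetail/54076

Lane Line Detection Dataset https://aistudio.baidu.com/aistudio/datasetdetail/68698

2020 China Hualu Cup Data Lake Algorithm Competition, Targeted Algorithm Track (Lane Line Recognition) https://aistudio.baidu.com/aistudio/datasetdetail/54289

Intelligent Car 2022 baseline dataset https://aistudio.baidu.com/aistudio/datasetdetail/125507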

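2.2 Organizing the dataset structure

Before training on a custom dataset, we convert the dataset to the following structure:

    custom_dataset
    |
    |--images
    |  |--image1.jpg
    |  |--image2.jpg
    |  |--...
    |
    |--labels
    |  |--label1.png
    |  |--label2.png
    |  |--...
    |
    |--train.txt
    |
    |--val.txt
    |
    |--test.txt

The contents of train.txt and val.txt look like this (one image path and its label path per line, separated by a space):

    images/image1.jpg labels/label1.png
    images/image2.jpg labels/label2.png
    ...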
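The list files described above can be generated with a short script. The following is a minimal sketch, not the project's own preprocessing code: the function name `write_file_lists`, the `val_ratio` and `seed` parameters, and the assumption that each image `images/<name>.jpg` has its label at `labels/<name>.png` are all hypothetical choices made for illustration.

```python
import random
from pathlib import Path


def write_file_lists(root, val_ratio=0.2, seed=0):
    """Write train.txt and val.txt under `root`; return (n_train, n_val).

    Assumes (hypothetically) that images/<name>.jpg is annotated by
    labels/<name>.png. Images without a matching label are skipped.
    """
    root = Path(root)
    pairs = []
    for image in sorted((root / "images").glob("*.jpg")):
        label = root / "labels" / (image.stem + ".png")
        if label.exists():
            # Paths are written relative to the dataset root.
            pairs.append(f"images/{image.name} labels/{label.name}")

    # Shuffle reproducibly, then split off the validation share.
    random.Random(seed).shuffle(pairs)
    n_val = int(len(pairs) * val_ratio)
    val_lines, train_lines = pairs[:n_val], pairs[n_val:]

    (root / "val.txt").write_text("".join(line + "\n" for line in val_lines))
    (root / "train.txt").write_text("".join(line + "\n" for line in train_lines))
    return len(train_lines), len(val_lines)
```

Calling `write_file_lists("/home/aistudio/custom_dataset/dataset2")` would then produce the two list files next to the `images` and `labels` directories, provided the dataset follows the naming assumption above.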

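2.3 Editing the yml file and tuning parameters

After completing the steps above, we need to write the custom dataset's information into the yml file.

File to modify: /home/aistudio/PaddleSeg/configs/quick_start/bisenet_optic_disc_512x512_1k.yml

We have already written the yml file; simply replace the original yml file from the Linux command line.

In [3]

    ! mv bisenet_optic_disc_512x512_1k.yml /home/aistudio/PaddleSeg/configs/quick_start/

Using this yml file as an example, here is how such a file is written: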

    batch_size: 4  # number of samples drawn per training step
    iters: 20000  # number of iterations

    train_dataset:
      type: Dataset
      dataset_root: /home/aistudio/custom_dataset/dataset2  # directory containing train.txt
      train_path: /home/aistudio/custom_dataset/dataset2/train.txt  # path to train.txt
      num_classes: 20  # number of classes
      transforms:  # image processing
        - type: Resize
          target_size: [512, 512]  # resize target
        - type: RandomHorizontalFlip  # data augmentation
        - type: Normalize  # normalization
      mode: train

    val_dataset:
      type: Dataset
      dataset_root: /home/aistudio/custom_dataset/dataset2  # directory containing val.txt
      val_path: /home/aistudio/custom_dataset/dataset2/val.txt  # path to val.txt
      num_classes: 20  # number of classes
      transforms:  # image processing
        - type: Resize
          target_size: [512, 512]  # resize target
        - type: Normalize  # normalization
      mode: val

    optimizer:  # optimizer
      type: sgd  # stochastic gradient descent
      momentum: 0.9  # momentum
      weight_decay: 4.0e-5  # weight decay

    lr_scheduler:  # learning-rate schedule
      type: PolynomialDecay  # polynomial decay
      learning_rate: 0.01  # initial learning rate
      end_lr: 0
      power: 0.9

    loss:  # loss function
      types:
        - type: CrossEntropyLoss  # cross-entropy loss
      coef: [1, 1, 1, 1, 1]

    model:
      type: BiSeNetV2  # BiSeNet (the V2 variant)
      pretrained: Null
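To make the `lr_scheduler` settings concrete, polynomial decay can be written as lr(t) = (lr0 - end_lr) * (1 - t / T)^power + end_lr. The sketch below restates that formula in plain Python with the values from the config. It assumes the decay runs over the full `iters: 20000`, which is our reading of the config rather than something the file states, and `polynomial_decay` is an illustrative name, not a PaddleSeg function.

```python
def polynomial_decay(step, base_lr=0.01, end_lr=0.0, power=0.9, decay_steps=20000):
    """Learning rate at `step` under polynomial decay.

    Defaults mirror the yml above; decay_steps=20000 assumes the schedule
    spans all training iterations (an assumption, see the lead-in).
    """
    step = min(step, decay_steps)  # hold at end_lr once the schedule is finished
    return (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr


# Print the learning rate at a few points of the schedule.
for step in (0, 5000, 10000, 15000, 20000):
    print(step, polynomial_decay(step))
```

With `power` below 1 the rate falls slightly slower than a straight line early on and drops to `end_lr` at the final iteration.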

2.4 Extracting the datasets

In [4]

    # Extract the dataset collection with Python
    ! python /home/aistudio/unzip.py
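The contents of unzip.py are not shown in this write-up. As a rough idea of what such a script might do, the sketch below extracts every .zip archive in one directory into its own subdirectory of a target directory using the standard `zipfile` module. The function name `extract_all` and both directory arguments are hypothetical; the actual script may work differently.

```python
import zipfile
from pathlib import Path


def extract_all(archive_dir, target_dir):
    """Extract each *.zip in `archive_dir` to `target_dir/<archive name>`.

    Hypothetical stand-in for the project's unzip.py, which is not shown.
    Returns the names of the archives that were extracted.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    extracted = []
    for archive in sorted(Path(archive_dir).glob("*.zip")):
        with zipfile.ZipFile(archive) as zf:
            # One subdirectory per archive keeps the datasets separate.
            zf.extractall(target / archive.stem)
        extracted.append(archive.name)
    return extracted
```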

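3. Model training

3.1 Model selection

3.1.1 Lightweight semantic segmentation

Designing lightweight network models is a popular research direction. Many researchers look for a balance among computation, parameter count, and accuracy, aiming for high accuracy with as little computation and as few parameters as possible. Current lightweight models mainly include SqueezeNet, the MobileNet family, and the ShuffleNet family. These models perform well in image classification and can serve as backbone networks for semantic segmentation.

In semantic segmentation, however, every pixel of the input image must be classified, so the computational cost is high. There are generally two ways to reduce it: shrinking the image and reducing model complexity. Shrinking the image cuts computation most directly, but the image loses a great deal of detail, which hurts accuracy. Reducing model complexity weakens the model's feature-extraction ability, which also hurts segmentation accuracy. Applying lightweight models to semantic segmentation while balancing real-time speed and accuracy is therefore quite challenging.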
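A quick calculation shows why shrinking the image is so effective: the number of per-pixel predictions grows with height times width, so halving both sides leaves a quarter of the pixels. The sketch below uses 1024x1024 as an illustrative original size (an assumption, the source resolution of the datasets is not stated) and 512x512 as the resized input.

```python
def pixel_count(height, width):
    """Number of pixels, and hence per-pixel predictions, for one image."""
    return height * width


def relative_pixel_count(scale):
    """Fraction of pixels left after scaling both sides by `scale`."""
    return scale * scale


original = pixel_count(1024, 1024)  # illustrative original size (assumed)
resized = pixel_count(512, 512)     # a 512x512 network input
print(original // resized)          # how many times fewer pixels to classify
print(relative_pixel_count(0.5))    # same ratio expressed as a fraction
```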

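3.1.2 Mainstream acceleration approaches

There are currently three main acceleration approaches:

- Restrict the input size by cropping or resizing to lower computational complexity. Although simple and effective, the loss of spatial detail degrades predictions, especially around boundaries, lowering accuracy in both metrics and visualizations;

- Reduce the number of network channels to speed up processing, especially in the early stages of the backbone; this weakens spatial information.

- Drop the last stage of the model in pursuit of an extremely compact framework (for example, ENet). The drawback is equally clear: because ENet abandons the downsampling in the last stage, the model's receptive field is not large enough to cover large objects, resulting in poor discriminative ability.

These acceleration methods lose much spatial detail or sacrifice spatial capacity, causing a large drop in accuracy. To compensate for the lost spatial information, some algorithms use a U-shape structure to recover it. However, a U-shape structure reduces speed, and much of the lost information cannot be recovered simply by fusing shallow features.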

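3.1.3 BiSeNet

The paper BiSeNet: Bilateral Segmentation Network for Real-time Semantic Segmentation proposes a new bilateral segmentation network, BiSeNet. First, it designs a spatial path with a small stride to preserve spatial location information and generate high-resolution feature maps. In parallel, it designs a semantic path (called the Context Path in the paper) with a fast downsampling rate to obtain a sizeable receptive field. On top of these two paths, a new feature fusion module merges their feature maps, balancing speed and accuracy.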
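The difference between the two paths is easiest to see in the feature-map sizes. The sketch below assumes the strides reported in the BiSeNet paper: the spatial path applies three stride-2 convolutions (output at 1/8 of the input), and the context path takes backbone features at 1/16 and 1/32. The BiSeNetV2 model selected in the yml uses a somewhat different layout, so treat the numbers as an illustration of the idea rather than of the trained network. The helper `feature_map_size` is an illustrative name.

```python
def feature_map_size(input_hw, output_stride):
    """Spatial size of a feature map downsampled by `output_stride`."""
    height, width = input_hw
    return (height // output_stride, width // output_stride)


INPUT_HW = (512, 512)  # matches the Resize target in the yml

# Spatial path: three stride-2 convolutions, 2 * 2 * 2 = 8x downsampling.
spatial = feature_map_size(INPUT_HW, 2 * 2 * 2)

# Context path: fast downsampling to 1/16 and 1/32 for a large receptive field.
context_16 = feature_map_size(INPUT_HW, 16)
context_32 = feature_map_size(INPUT_HW, 32)

# Before fusion the context features must be upsampled to the spatial size.
upsample_16 = spatial[0] // context_16[0]
upsample_32 = spatial[0] // context_32[0]

print(spatial, context_16, context_32, upsample_16, upsample_32)
```

The spatial path keeps a 64x64 map for a 512x512 input, while the context features are two and four times smaller per side, which is why a dedicated fusion step is needed.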

3.2 Training the BiSeNet model

In [5]

    # Train the model
    ! python /home/aistudio/PaddleSeg/train.py --config /home/aistudio/PaddleSeg/configs/quick_start/bisenet_optic_disc_512x512_1k.yml --do_eval --use_vdl --save_interval 4000 --save_dir output
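With `iters: 20000` in the yml and `--save_interval 4000` on the command line, it helps to know in advance when checkpoints are written. The sketch below lists those iterations, assuming a checkpoint is saved at every multiple of the interval and once more at the final iteration; this is our understanding of the training loop, not something stated in this write-up. With `--do_eval`, evaluation on the validation set is expected to run at the same points. The function name `checkpoint_iters` is illustrative.

```python
def checkpoint_iters(total_iters=20000, save_interval=4000):
    """Iterations at which a checkpoint is expected to be saved.

    Assumes saving at each multiple of `save_interval` plus the last
    iteration (an assumption about the training loop).
    """
    points = list(range(save_interval, total_iters + 1, save_interval))
    if not points or points[-1] != total_iters:
        points.append(total_iters)  # the final iteration is always saved
    return points


print(checkpoint_iters())
```

For this run that gives five checkpoints, so five evaluation points appear in the training log and in the visualization charts.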