A step-by-step guide to deploying a small mushroom-recognition app

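Date: 2025-07-24

This article walks through deploying a mushroom classification model with the PaddlePaddle EasyEdge platform. We first frame the problem as an image classification task, then unzip and inspect the mushroom dataset, split it into training and validation sets, define a dataset class, and apply image augmentation. We then pick the mobilenet_v2 network, configure an optimizer, train the model, save it as a static graph, and deploy it through EasyEdge in a few simple steps.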

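Project background

PaddlePaddle recently launched EasyEdge, a device-side and edge AI service platform, which is very friendly to newcomers.

Previously, once we had developed a model, there was no quick way to deploy it to a phone.

With EasyEdge, the model can be deployed directly on the platform, and the workflow is short and smooth. The example below shows how.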

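① Problem definition

When you want to solve a task with deep learning, the general workflow is:

① problem definition -> ② data preparation -> ③ model selection and development -> ④ model training and tuning -> ⑤ model evaluation and testing -> ⑥ deployment

Mushroom classification in this project is essentially an image classification task, and we tackle it with the lightweight convolutional neural network mobilenet_v2.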

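② Data preparation

2.1 Unzipping the dataset

We uploaded the dataset, which we obtained online, to AI Studio as a zip archive and loaded it into the project.

Before using it, we unzip the archive.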

    # !unzip -oq /home/aistudio/data/data81902/mushrooms_train.zip -d work/
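For readers working outside a notebook, the same extraction step can be sketched in plain Python with the standard library. The helper name `extract_dataset` is hypothetical and not part of the original project; the paths in the usage note are the ones from the command above.

```python
import zipfile
from pathlib import Path


def extract_dataset(archive_path, target_dir):
    """Extract every member of a zip archive into target_dir.

    Existing files are overwritten, similar to `unzip -o`.
    Returns the sorted list of member names found in the archive.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive_path) as archive:
        archive.extractall(target)
        return sorted(archive.namelist())
```

A call such as `extract_dataset('/home/aistudio/data/data81902/mushrooms_train.zip', 'work/')` would then mirror the shell command.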

    import paddle
    paddle.seed(8888)
    import numpy as np
    from typing import Callable

    # Parameter configuration
    config_parameters = {
        "class_dim": 9,  # number of classes
        "target_path": "/home/aistudio/work/",
        'train_image_dir': '/home/aistudio/work/trainImages',
        'eval_image_dir': '/home/aistudio/work/evalImages',
        'epochs': 100,
        'batch_size': 128,
        'lr': 0.01
    }

2.2 Data labeling

Let's first look at what the unzipped dataset looks like.

    import os
    import random
    from matplotlib import pyplot as plt
    from PIL import Image

    imgs = []
    paths = os.listdir('work/mushrooms_train')
    for path in paths:
        img_path = os.path.join('work/mushrooms_train', path)
        if os.path.isdir(img_path):
            # Pick one random image from each class folder
            img_paths = os.listdir(img_path)
            img = Image.open(os.path.join(img_path, random.choice(img_paths)))
            imgs.append((img, path))

    # Show the first nine classes in a 3x3 grid, titled with the folder name
    f, ax = plt.subplots(3, 3, figsize=(12, 12))
    for i, img in enumerate(imgs[:9]):
        ax[i//3, i%3].imshow(img[0])
        ax[i//3, i%3].axis('off')
        ax[i//3, i%3].set_title('label: %s' % img[1])
    plt.show()

    <Figure size 864x864 with 9 Axes>

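2.3 Splitting the dataset and defining the dataset class

Next we use the labeled files to define a dataset class, which makes the later model training easier.

2.3.1 Splitting the dataset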

    # import os
    # import shutil

    # train_dir = config_parameters['train_image_dir']
    # eval_dir = config_parameters['eval_image_dir']
    # paths = os.listdir('work/mushrooms_train')

    # if not os.path.exists(train_dir):
    #     os.mkdir(train_dir)
    # if not os.path.exists(eval_dir):
    #     os.mkdir(eval_dir)

    # for path in paths:
    #     imgs_dir = os.listdir(os.path.join('work/mushrooms_train', path))
    #     target_train_dir = os.path.join(train_dir, path)
    #     target_eval_dir = os.path.join(eval_dir, path)
    #     if not os.path.exists(target_train_dir):
    #         os.mkdir(target_train_dir)
    #     if not os.path.exists(target_eval_dir):
    #         os.mkdir(target_eval_dir)
    #     for i in range(len(imgs_dir)):
    #         # Replace spaces in file names with underscores
    #         if ' ' in imgs_dir[i]:
    #             new_name = imgs_dir[i].replace(' ', '_')
    #         else:
    #             new_name = imgs_dir[i]
    #         target_train_path = os.path.join(target_train_dir, new_name)
    #         target_eval_path = os.path.join(target_eval_dir, new_name)
    #         # Every fifth image goes to the validation set, the rest to training
    #         if i % 5 == 0:
    #             shutil.copyfile(os.path.join(os.path.join('work/mushrooms_train', path), imgs_dir[i]), target_eval_path)
    #         else:
    #             shutil.copyfile(os.path.join(os.path.join('work/mushrooms_train', path), imgs_dir[i]), target_train_path)

    # print('finished train val split!')
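The split rule in the script above (every fifth file per class goes to validation, and spaces in file names become underscores) can be isolated into a small pure function, which makes it easy to check without touching the file system. This is a minimal sketch; the name `split_every_nth` and its `n` parameter are hypothetical.

```python
def split_every_nth(filenames, n=5):
    """Split one class folder's file names into (train, eval) lists.

    Files at positions 0, n, 2n, ... go to the eval list and all others
    to the train list. Spaces in names are replaced with underscores,
    as in the copy step of the original script.
    """
    train, evaluation = [], []
    for i, name in enumerate(filenames):
        target_name = name.replace(' ', '_')
        if i % n == 0:
            evaluation.append(target_name)
        else:
            train.append(target_name)
    return train, evaluation
```

Because `os.listdir` returns entries in arbitrary order, passing `sorted(os.listdir(...))` to such a function is one way to make the split reproducible across machines.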

2.3.2 Defining the dataset class

    # Dataset definition
    class TowerDataset(paddle.io.Dataset):
        """
        Step 1: inherit from paddle.io.Dataset
        """
        def __init__(self, transforms: Callable, mode: str = 'train'):
            """
            Step 2: implement the constructor and define how the data is read
            """
            super(TowerDataset, self).__init__()

            self.mode = mode
            self.transforms = transforms

            train_image_dir = config_parameters['train_image_dir']
            eval_image_dir = config_parameters['eval_image_dir']

            train_data_folder = paddle.vision.DatasetFolder(train_image_dir)
            eval_data_folder = paddle.vision.DatasetFolder(eval_image_dir)

            if self.mode == 'train':
                self.data = train_data_folder
            elif self.mode == 'eval':
                self.data = eval_data_folder

        def __getitem__(self, index):
            """
            Step 3: implement __getitem__, which defines how to fetch the item
            at a given index and returns one sample (image data, label)
            """
            data = np.array(self.data[index][0]).astype('float32')
            data = self.transforms(data)
            label = np.array([self.data[index][1]]).astype('int64')

            return data, label

        def __len__(self):
            """
            Step 4: implement __len__, which returns the total number of samples
            """
            return len(self.data)

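2.3.3 Instantiating the dataset class and image augmentation

We instantiate the dataset class to match our data and then check the total sample count.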

    from paddle.vision import transforms as T

    # Data augmentation
    transform_train = T.Compose([
        T.Resize((256, 256)),
        T.RandomHorizontalFlip(0.5),  # flip probability; the original value of 10 lies outside [0, 1]
        T.RandomRotation(10),
        T.Transpose(),
        T.Normalize(mean=[0, 0, 0],  # scale pixel values to [0, 1]
                    std=[255, 255, 255]),
        # transforms.ToTensor(),  # transpose + (img / 255), and converts the data to a Paddle Tensor
        T.Normalize(mean=[0.50950350, 0.54632660, 0.57409690],  # subtract the mean, divide by the std
                    std=[0.26059777, 0.26041326, 0.29220656])
        # Computation: output[channel] = (input[channel] - mean[channel]) / std[channel]
    ])

    transform_eval = T.Compose([
        T.Resize((256, 256)),
        T.Transpose(),
        T.Normalize(mean=[0, 0, 0],  # scale pixel values to [0, 1]
                    std=[255, 255, 255]),
        # transforms.ToTensor(),  # transpose + (img / 255), and converts the data to a Paddle Tensor
        T.Normalize(mean=[0.50950350, 0.54632660, 0.57409690],  # subtract the mean, divide by the std
                    std=[0.26059777, 0.26041326, 0.29220656])
        # Computation: output[channel] = (input[channel] - mean[channel]) / std[channel]
    ])
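The two consecutive Normalize steps above first divide by 255 and then standardize with the per-channel mean and standard deviation. As a worked check of that arithmetic, the sketch below applies both steps to a single pixel value and compares the result with an equivalent single step that uses the mean and standard deviation scaled by 255. The function names are hypothetical; the constants are copied from the transform definition.

```python
MEAN = [0.50950350, 0.54632660, 0.57409690]
STD = [0.26059777, 0.26041326, 0.29220656]


def normalize_two_steps(pixel, channel):
    """Apply (x - 0) / 255, then (x - mean) / std, as in the pipeline."""
    scaled = (pixel - 0.0) / 255.0
    return (scaled - MEAN[channel]) / STD[channel]


def normalize_one_step(pixel, channel):
    """Algebraically equivalent single step on the raw 0-255 pixel value."""
    return (pixel - 255.0 * MEAN[channel]) / (255.0 * STD[channel])
```

Both functions agree up to floating-point rounding, which is why the two Normalize calls could in principle be merged into one.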

    train_dataset = TowerDataset(mode='train', transforms=transform_train)
    eval_dataset = TowerDataset(mode='eval', transforms=transform_eval)

    # Asynchronous data loading
    train_loader = paddle.io.DataLoader(train_dataset,
                                        places=paddle.CUDAPlace(0),
                                        batch_size=128,
                                        shuffle=True,
                                        # num_workers=2,
                                        # use_shared_memory=True
                                        )
    eval_loader = paddle.io.DataLoader(eval_dataset,
                                       places=paddle.CUDAPlace(0),
                                       batch_size=128,
                                       # num_workers=2,
                                       # use_shared_memory=True
                                       )

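    print('Training set size: {}, validation set size: {}'.format(len(train_loader), len(eval_loader)))

    Training set size: 42, validation set size: 11

Note that len() of a DataLoader returns the number of batches, so these figures count batches of 128 images rather than individual images.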
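Since the printed values are taken with len() on the data loaders, they are batch counts at a batch size of 128. The sketch below shows the arithmetic that links a sample count to a batch count, and the range of sample counts consistent with the reported 42 and 11. The function names are hypothetical, and the sketch assumes the last partial batch is kept (no `drop_last`), as in the loader configuration above.

```python
import math


def num_batches(num_samples, batch_size):
    """Number of batches when the final partial batch is kept."""
    return math.ceil(num_samples / batch_size)


def sample_range_for(batches, batch_size):
    """Smallest and largest sample counts that produce `batches` batches."""
    return (batches - 1) * batch_size + 1, batches * batch_size


print(sample_range_for(42, 128))  # (5249, 5376)
print(sample_range_for(11, 128))  # (1281, 1408)
```

Calling `len(train_dataset)` and `len(eval_dataset)` instead would report the exact image counts.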

③ Model selection and development

3.1 Building the network

For this example we use the mobilenet_v2 network.

    network = paddle.vision.models.mobilenet_v2(pretrained=True, num_classes=9)
    model = paddle.Model(network)
    model.summary((-1, 3, 256, 256))

    2021-06-03 21:51:44,710 - INFO - unique_endpoints {''}
    2021-06-03 21:51:44,711 - INFO - Downloading mobilenet_v2_x1.0.pdparams from https://paddle-hapi.bj.bcebos.com/models/mobilenet_v2_x1.0.pdparams
    100%|██████████| 20795/20795 [00:00<00:00, 22406.24it/s]
    2021-06-03 21:51:46,068 - INFO - File /home/aistudio/.cache/paddle/hapi/weights/mobilenet_v2_x1.0.pdparams md5 checking...
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1303: UserWarning: Skip loading for classifier.1.weight. classifier.1.weight receives a shape [1280, 1000], but the expected shape is [1280, 9].
      warnings.warn(("Skip loading for {}. ".format(key) + str(err)))
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1303: UserWarning: Skip loading for classifier.1.bias. classifier.1.bias receives a shape [1000], but the expected shape is [9].
      warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

| Layer (type) | Input Shape | Output Shape | Param # |
|---|---|---|---|
| Conv2D-1 | [[1, 3, 256, 256]] | [1, 32, 128, 128] | 864 |
| BatchNorm2D-1 | [[1, 32, 128, 128]] | [1, 32, 128, 128] | 128 |
| ReLU6-1 | [[1, 32, 128, 128]] | [1, 32, 128, 128] | 0 |
| Conv2D-2 | [[1, 32, 128, 128]] | [1, 32, 128, 128] | 288 |
| BatchNorm2D-2 | [[1, 32, 128, 128]] | [1, 32, 128, 128] | 128 |
| ReLU6-2 | [[1, 32, 128, 128]] | [1, 32, 128, 128] | 0 |
| Conv2D-3 | [[1, 32, 128, 128]] | [1, 16, 128, 128] | 512 |
| BatchNorm2D-3 | [[1, 16, 128, 128]] | [1, 16, 128, 128] | 64 |
| InvertedResidual-1 | [[1, 32, 128, 128]] | [1, 16, 128, 128] | 0 |
| Conv2D-4 | [[1, 16, 128, 128]] | [1, 96, 128, 128] | 1,536 |
| BatchNorm2D-4 | [[1, 96, 128, 128]] | [1, 96, 128, 128] | 384 |
| ReLU6-3 | [[1, 96, 128, 128]] | [1, 96, 128, 128] | 0 |
| Conv2D-5 | [[1, 96, 128, 128]] | [1, 96, 64, 64] | 864 |
| BatchNorm2D-5 | [[1, 96, 64, 64]] | [1, 96, 64, 64] | 384 |
| ReLU6-4 | [[1, 96, 64, 64]] | [1, 96, 64, 64] | 0 |
| Conv2D-6 | [[1, 96, 64, 64]] | [1, 24, 64, 64] | 2,304 |
| BatchNorm2D-6 | [[1, 24, 64, 64]] | [1, 24, 64, 64] | 96 |
| InvertedResidual-2 | [[1, 16, 128, 128]] | [1, 24, 64, 64] | 0 |
| Conv2D-7 | [[1, 24, 64, 64]] | [1, 144, 64, 64] | 3,456 |
| BatchNorm2D-7 | [[1, 144, 64, 64]] | [1, 144, 64, 64] | 576 |
| ReLU6-5 | [[1, 144, 64, 64]] | [1, 144, 64, 64] | 0 |
| Conv2D-8 | [[1, 144, 64, 64]] | [1, 144, 64, 64] | 1,296 |
| BatchNorm2D-8 | [[1, 144, 64, 64]] | [1, 144, 64, 64] | 576 |
| ReLU6-6 | [[1, 144, 64, 64]] | [1, 144, 64, 64] | 0 |
| Conv2D-9 | [[1, 144, 64, 64]] | [1, 24, 64, 64] | 3,456 |
| BatchNorm2D-9 | [[1, 24, 64, 64]] | [1, 24, 64, 64] | 96 |
| InvertedResidual-3 | [[1, 24, 64, 64]] | [1, 24, 64, 64] | 0 |
| Conv2D-10 | [[1, 24, 64, 64]] | [1, 144, 64, 64] | 3,456 |
| BatchNorm2D-10 | [[1, 144, 64, 64]] | [1, 144, 64, 64] | 576 |
| ReLU6-7 | [[1, 144, 64, 64]] | [1, 144, 64, 64] | 0 |
| Conv2D-11 | [[1, 144, 64, 64]] | [1, 144, 32, 32] | 1,296 |
| BatchNorm2D-11 | [[1, 144, 32, 32]] | [1, 144, 32, 32] | 576 |
| ReLU6-8 | [[1, 144, 32, 32]] | [1, 144, 32, 32] | 0 |
| Conv2D-12 | [[1, 144, 32, 32]] | [1, 32, 32, 32] | 4,608 |
| BatchNorm2D-12 | [[1, 32, 32, 32]] | [1, 32, 32, 32] | 128 |
| InvertedResidual-4 | [[1, 24, 64, 64]] | [1, 32, 32, 32] | 0 |
| Conv2D-13 | [[1, 32, 32, 32]] | [1, 192, 32, 32] | 6,144 |
| BatchNorm2D-13 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 768 |
| ReLU6-9 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 0 |
| Conv2D-14 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 1,728 |
| BatchNorm2D-14 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 768 |
| ReLU6-10 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 0 |
| Conv2D-15 | [[1, 192, 32, 32]] | [1, 32, 32, 32] | 6,144 |
| BatchNorm2D-15 | [[1, 32, 32, 32]] | [1, 32, 32, 32] | 128 |
| InvertedResidual-5 | [[1, 32, 32, 32]] | [1, 32, 32, 32] | 0 |
| Conv2D-16 | [[1, 32, 32, 32]] | [1, 192, 32, 32] | 6,144 |
| BatchNorm2D-16 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 768 |
| ReLU6-11 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 0 |
| Conv2D-17 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 1,728 |
| BatchNorm2D-17 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 768 |
| ReLU6-12 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 0 |
| Conv2D-18 | [[1, 192, 32, 32]] | [1, 32, 32, 32] | 6,144 |
| BatchNorm2D-18 | [[1, 32, 32, 32]] | [1, 32, 32, 32] | 128 |
| InvertedResidual-6 | [[1, 32, 32, 32]] | [1, 32, 32, 32] | 0 |
| Conv2D-19 | [[1, 32, 32, 32]] | [1, 192, 32, 32] | 6,144 |
| BatchNorm2D-19 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 768 |
| ReLU6-13 | [[1, 192, 32, 32]] | [1, 192, 32, 32] | 0 |
| Conv2D-20 | [[1, 192, 32, 32]] | [1, 192, 16, 16] | 1,728 |
| BatchNorm2D-20 | [[1, 192, 16, 16]] | [1, 192, 16, 16] | 768 |
| ReLU6-14 | [[1, 192, 16, 16]] | [1, 192, 16, 16] | 0 |
| Conv2D-21 | [[1, 192, 16, 16]] | [1, 64, 16, 16] | 12,288 |
| BatchNorm2D-21 | [[1, 64, 16, 16]] | [1, 64, 16, 16] | 256 |
| InvertedResidual-7 | [[1, 32, 32, 32]] | [1, 64, 16, 16] | 0 |
| Conv2D-22 | [[1, 64, 16, 16]] | [1, 384, 16, 16] | 24,576 |
| BatchNorm2D-22 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-15 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-23 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 3,456 |
| BatchNorm2D-23 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-16 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-24 | [[1, 384, 16, 16]] | [1, 64, 16, 16] | 24,576 |
| BatchNorm2D-24 | [[1, 64, 16, 16]] | [1, 64, 16, 16] | 256 |
| InvertedResidual-8 | [[1, 64, 16, 16]] | [1, 64, 16, 16] | 0 |
| Conv2D-25 | [[1, 64, 16, 16]] | [1, 384, 16, 16] | 24,576 |
| BatchNorm2D-25 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-17 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-26 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 3,456 |
| BatchNorm2D-26 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-18 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-27 | [[1, 384, 16, 16]] | [1, 64, 16, 16] | 24,576 |
| BatchNorm2D-27 | [[1, 64, 16, 16]] | [1, 64, 16, 16] | 256 |
| InvertedResidual-9 | [[1, 64, 16, 16]] | [1, 64, 16, 16] | 0 |
| Conv2D-28 | [[1, 64, 16, 16]] | [1, 384, 16, 16] | 24,576 |
| BatchNorm2D-28 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-19 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-29 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 3,456 |
| BatchNorm2D-29 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-20 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-30 | [[1, 384, 16, 16]] | [1, 64, 16, 16] | 24,576 |
| BatchNorm2D-30 | [[1, 64, 16, 16]] | [1, 64, 16, 16] | 256 |
| InvertedResidual-10 | [[1, 64, 16, 16]] | [1, 64, 16, 16] | 0 |
| Conv2D-31 | [[1, 64, 16, 16]] | [1, 384, 16, 16] | 24,576 |
| BatchNorm2D-31 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-21 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-32 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 3,456 |
| BatchNorm2D-32 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 1,536 |
| ReLU6-22 | [[1, 384, 16, 16]] | [1, 384, 16, 16] | 0 |
| Conv2D-33 | [[1, 384, 16, 16]] | [1, 96, 16, 16] | 36,864 |
| BatchNorm2D-33 | [[1, 96, 16, 16]] | [1, 96, 16, 16] | 384 |
| InvertedResidual-11 | [[1, 64, 16, 16]] | [1, 96, 16, 16] | 0 |
| Conv2D-34 | [[1, 96, 16, 16]] | [1, 576, 16, 16] | 55,296 |
| BatchNorm2D-34 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 2,304 |
| ReLU6-23 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 0 |
| Conv2D-35 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 5,184 |
| BatchNorm2D-35 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 2,304 |
| ReLU6-24 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 0 |
| Conv2D-36 | [[1, 576, 16, 16]] | [1, 96, 16, 16] | 55,296 |
| BatchNorm2D-36 | [[1, 96, 16, 16]] | [1, 96, 16, 16] | 384 |
| InvertedResidual-12 | [[1, 96, 16, 16]] | [1, 96, 16, 16] | 0 |
| Conv2D-37 | [[1, 96, 16, 16]] | [1, 576, 16, 16] | 55,296 |
| BatchNorm2D-37 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 2,304 |
| ReLU6-25 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 0 |
| Conv2D-38 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 5,184 |
| BatchNorm2D-38 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 2,304 |
| ReLU6-26 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 0 |
| Conv2D-39 | [[1, 576, 16, 16]] | [1, 96, 16, 16] | 55,296 |
| BatchNorm2D-39 | [[1, 96, 16, 16]] | [1, 96, 16, 16] | 384 |
| InvertedResidual-13 | [[1, 96, 16, 16]] | [1, 96, 16, 16] | 0 |
| Conv2D-40 | [[1, 96, 16, 16]] | [1, 576, 16, 16] | 55,296 |
| BatchNorm2D-40 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 2,304 |
| ReLU6-27 | [[1, 576, 16, 16]] | [1, 576, 16, 16] | 0 |
| Conv2D-41 | [[1, 576, 16, 16]] | [1, 576, 8, 8] | 5,184 |
| BatchNorm2D-41 | [[1, 576, 8, 8]] | [1, 576, 8, 8] | 2,304 |
| ReLU6-28 | [[1, 576, 8, 8]] | [1, 576, 8, 8] | 0 |
| Conv2D-42 | [[1, 576, 8, 8]] | [1, 160, 8, 8] | 92,160 |
| BatchNorm2D-42 | [[1, 160, 8, 8]] | [1, 160, 8, 8] | 640 |
| InvertedResidual-14 | [[1, 96, 16, 16]] | [1, 160, 8, 8] | 0 |
| Conv2D-43 | [[1, 160, 8, 8]] | [1, 960, 8, 8] | 153,600 |
| BatchNorm2D-43 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 3,840 |
| ReLU6-29 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 0 |
| Conv2D-44 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 8,640 |
| BatchNorm2D-44 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 3,840 |
| ReLU6-30 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 0 |
| Conv2D-45 | [[1, 960, 8, 8]] | [1, 160, 8, 8] | 153,600 |
| BatchNorm2D-45 | [[1, 160, 8, 8]] | [1, 160, 8, 8] | 640 |
| InvertedResidual-15 | [[1, 160, 8, 8]] | [1, 160, 8, 8] | 0 |
| Conv2D-46 | [[1, 160, 8, 8]] | [1, 960, 8, 8] | 153,600 |
| BatchNorm2D-46 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 3,840 |
| ReLU6-31 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 0 |
| Conv2D-47 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 8,640 |
| BatchNorm2D-47 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 3,840 |
| ReLU6-32 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 0 |
| Conv2D-48 | [[1, 960, 8, 8]] | [1, 160, 8, 8] | 153,600 |
| BatchNorm2D-48 | [[1, 160, 8, 8]] | [1, 160, 8, 8] | 640 |
| InvertedResidual-16 | [[1, 160, 8, 8]] | [1, 160, 8, 8] | 0 |
| Conv2D-49 | [[1, 160, 8, 8]] | [1, 960, 8, 8] | 153,600 |
| BatchNorm2D-49 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 3,840 |
| ReLU6-33 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 0 |
| Conv2D-50 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 8,640 |
| BatchNorm2D-50 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 3,840 |
| ReLU6-34 | [[1, 960, 8, 8]] | [1, 960, 8, 8] | 0 |
| Conv2D-51 | [[1, 960, 8, 8]] | [1, 320, 8, 8] | 307,200 |
| BatchNorm2D-51 | [[1, 320, 8, 8]] | [1, 320, 8, 8] | 1,280 |
| InvertedResidual-17 | [[1, 160, 8, 8]] | [1, 320, 8, 8] | 0 |
| Conv2D-52 | [[1, 320, 8, 8]] | [1, 1280, 8, 8] | 409,600 |
| BatchNorm2D-52 | [[1, 1280, 8, 8]] | [1, 1280, 8, 8] | 5,120 |
| ReLU6-35 | [[1, 1280, 8, 8]] | [1, 1280, 8, 8] | 0 |
| AdaptiveAvgPool2D-1 | [[1, 1280, 8, 8]] | [1, 1280, 1, 1] | 0 |
| Dropout-1 | [[1, 1280]] | [1, 1280] | 0 |
| Linear-1 | [[1, 1280]] | [1, 9] | 11,529 |

Total params: 2,269,513
Trainable params: 2,201,289
Non-trainable params: 68,224

Input size (MB): 0.75
Forward/backward pass size (MB): 199.66
Params size (MB): 8.66
Estimated Total Size (MB): 209.07

    {'total_params': 2269513, 'trainable_params': 2201289}
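Several figures in the summary above can be reproduced by hand, which is a useful sanity check when the class count changes. The sketch below recomputes a few of them. The helper names are hypothetical, and the kernel sizes and depthwise grouping are assumptions taken from the standard MobileNetV2 design rather than from the summary output itself; the size figures assume 4-byte float32 values.

```python
def conv2d_params(in_channels, out_channels, kernel_size, groups=1):
    """Weight count of a bias-free 2D convolution."""
    return (in_channels // groups) * out_channels * kernel_size * kernel_size


def linear_params(in_features, out_features):
    """Weight matrix plus bias vector of a fully connected layer."""
    return in_features * out_features + out_features


def size_mb(num_values, bytes_per_value=4):
    """Size in MB of num_values stored as float32 by default."""
    return num_values * bytes_per_value / (1024 ** 2)


print(conv2d_params(3, 32, 3))              # Conv2D-1: 864
print(conv2d_params(32, 32, 3, groups=32))  # Conv2D-2 (depthwise): 288
print(conv2d_params(32, 16, 1))             # Conv2D-3 (pointwise): 512
print(linear_params(1280, 9))               # Linear-1: 11529
print(round(size_mb(2269513), 2))           # Params size (MB): 8.66
print(size_mb(3 * 256 * 256))               # Input size (MB): 0.75
print(2269513 - 68224)                      # Trainable params: 2201289
```

The Linear-1 line also shows why the pretrained classifier weights were skipped in the log: the head now maps 1280 features to 9 classes instead of 1000.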

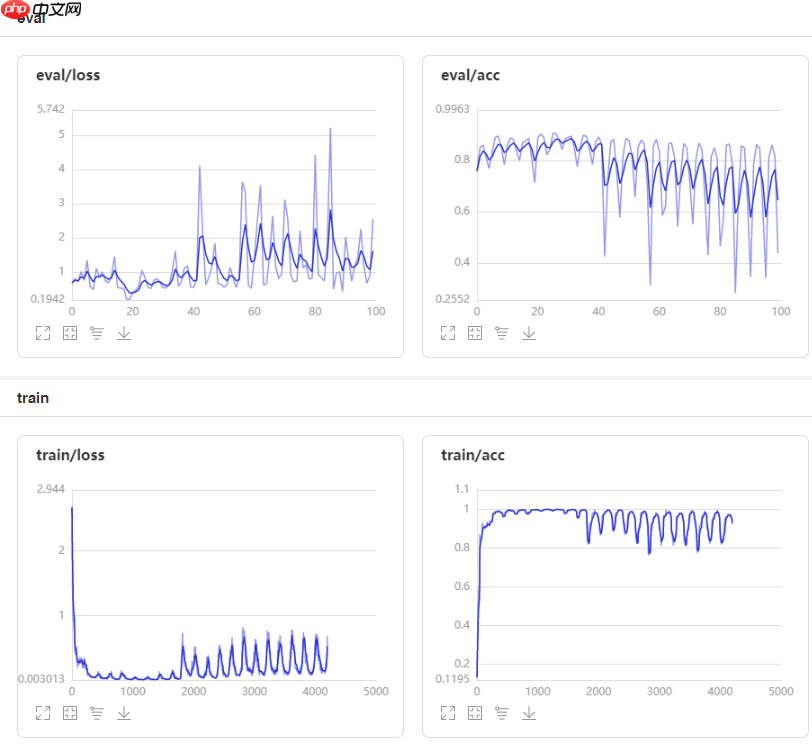

④ Model training and optimizer selection

    # Callback that saves the best model, followed by the optimizer setup
    class SaveBestModel(paddle.callbacks.Callback):
        def __init__(self, target=0.5, path='work/best_model', verbose=0):
            self.target = target
            self.epoch = None
            self.path = path

        def on_epoch_end(self, epoch, logs=None):
            self.epoch = epoch

        def on_eval_end(self, logs=None):
            # Save whenever validation accuracy beats the best value so far
            if logs.get('acc') > self.target:
                self.target = logs.get('acc')
                self.model.save(self.path)
                print('best acc is {} at epoch {}'.format(self.target, self.epoch))

    callback_visualdl = paddle.callbacks.VisualDL(log_dir='work/mushroom')
    callback_savebestmodel = SaveBestModel(target=0.5, path='work/best_model')
    callbacks = [callback_visualdl, callback_savebestmodel]

    base_lr = config_parameters['lr']
    epochs = config_parameters['epochs']

    def make_optimizer(parameters=None):
        momentum = 0.9
        learning_rate = paddle.optimizer.lr.CosineAnnealingDecay(learning_rate=base_lr, T_max=epochs, verbose=False)
        weight_decay = paddle.regularizer.L2Decay(0.0001)
        optimizer = paddle.optimizer.Momentum(
            learning_rate=learning_rate,
            momentum=momentum,
            weight_decay=weight_decay,
            parameters=parameters)
        return optimizer

    optimizer = make_optimizer(model.parameters())
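To give a feel for the learning-rate schedule configured above, here is a minimal sketch of the standard cosine annealing formula in closed form, using the article's base rate of 0.01 and T_max of 100. The function name is hypothetical, and `eta_min=0.0` is an assumption about the scheduler's default minimum rate. The sketch treats `step` as a generic counter; whether one step corresponds to an epoch or to a batch depends on how often the training loop advances the scheduler.

```python
import math


def cosine_annealing_lr(step, base_lr=0.01, t_max=100, eta_min=0.0):
    """Closed-form cosine annealing: decays from base_lr to eta_min over t_max steps."""
    cosine = (1 + math.cos(math.pi * step / t_max)) / 2
    return eta_min + (base_lr - eta_min) * cosine
```

The rate starts at the base value, falls to half of it at the midpoint (`step == t_max / 2`), and reaches the minimum at `step == t_max`.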

    model.prepare(optimizer,
                  paddle.nn.CrossEntropyLoss(),
                  paddle.metric.Accuracy())

    model.fit(train_loader,
              eval_loader,
              epochs=100,
              batch_size=128,  # ignored here, because DataLoader instances already define the batch size
              callbacks=callbacks,
              verbose=1)  # log display format

⑤ Model evaluation and testing

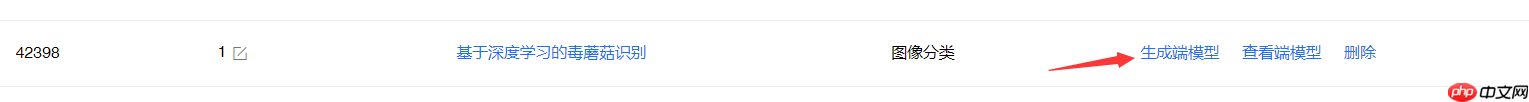
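The metric tracked during training is top-1 accuracy: the share of images whose highest-scoring class matches the label. The sketch below computes that quantity in plain Python for a small batch of scores. It illustrates what the metric measures and is not Paddle's own implementation; the function name `top1_accuracy` is hypothetical.

```python
def top1_accuracy(logits, labels):
    """Fraction of samples whose highest-scoring class equals the label.

    logits: list of per-class score lists, one per sample.
    labels: list of integer class indices, one per sample.
    """
    if len(logits) != len(labels):
        raise ValueError('logits and labels must have the same length')
    if not labels:
        return 0.0
    correct = 0
    for scores, label in zip(logits, labels):
        predicted = max(range(len(scores)), key=scores.__getitem__)
        if predicted == label:
            correct += 1
    return correct / len(labels)
```

Within the project itself, calling `model.evaluate(eval_loader)` on the prepared `paddle.Model` reports the same metric over the validation set.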

⑥ Deployment

We save the trained model as a static graph, which produces two files, mushroom.pdmodel and mushroom.pdiparams, and we also prepare a label_list.txt file.

    model.save('mushroom', training=False)
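The article does not show how label_list.txt is produced. One possible way is sketched below, under two loudly stated assumptions: that the file holds one class name per line, and that class indices follow the alphabetical order of the class folder names, which is how the folder-based dataset is assumed to number them. Both should be checked against the EasyEdge upload instructions. The function name `write_label_list` is hypothetical.

```python
import os


def write_label_list(train_image_dir, output_path='label_list.txt'):
    """Write one class name per line, in sorted folder-name order.

    Assumes label index i corresponds to the i-th folder name when the
    class folders are sorted alphabetically.
    """
    labels = sorted(
        name for name in os.listdir(train_image_dir)
        if os.path.isdir(os.path.join(train_image_dir, name))
    )
    with open(output_path, 'w', encoding='utf-8') as f:
        f.write('\n'.join(labels) + '\n')
    return labels
```

For this project the call would be `write_label_list('/home/aistudio/work/trainImages')`, using the training directory from the configuration.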

6.1 Uploading the original model

Follow the screenshot to upload the files, validate them, and generate a demo.

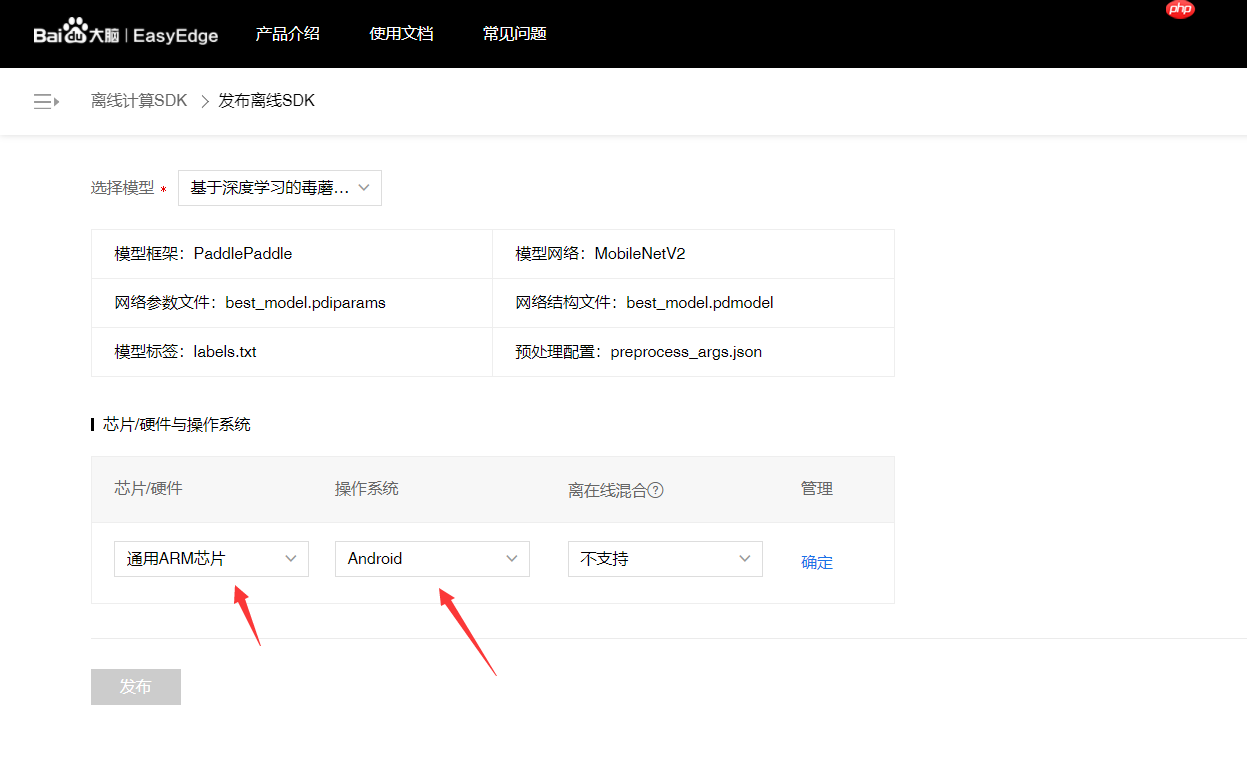

6.2 Generating the device-side model

6.3 Downloading and trying the demo

Source: https://www.php.cn/faq/1425865.html
