浅析 Swin Transformer

时间:2025-07-18 | 作者: | 阅读:0本文围绕Swin Transformer展开,介绍其作为通用视觉骨干网,采用层次化结构与移位窗口机制提升效率。解读其解决CV挑战的创新点,展示代码构造,包括MLP、窗口划分合并、注意力机制等模块,还给出模型定义、大小及在Cifar10上的训练情况,指出其收敛较慢。

Swin Transformer

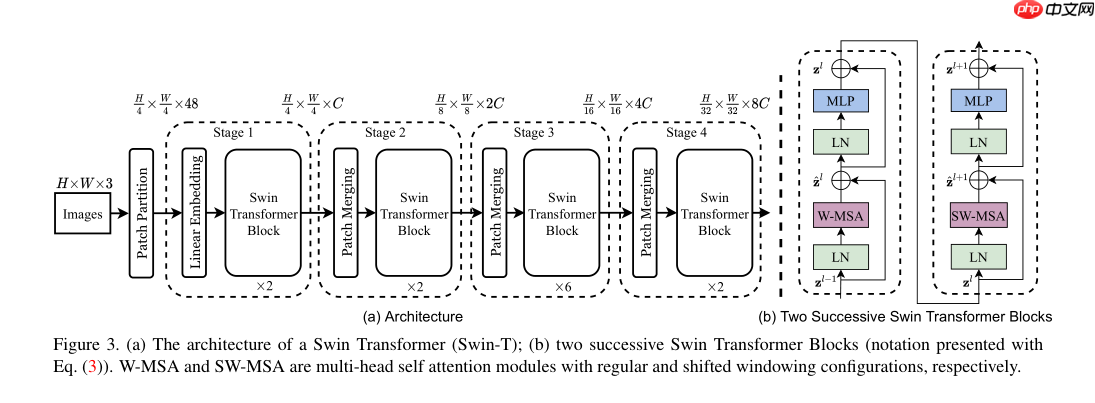

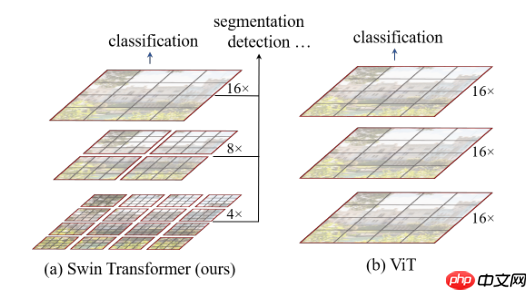

Swin Transformer?(the name?Swin?stands for?Shifted?window) is initially described in arxiv, which capably serves as a general-purpose backbone for computer vision. It is basically a hierarchical Transformer whose representation is computed with shifted windows. The shifted windowing scheme brings greater efficiency by limiting self-attention computation to non-overlapping local windows while also allowing for cross-window connection.

paper:https://arxiv.org/pdf/2103.14030.pdf

github:?https://github.com/microsoft/Swin-Transformer

前言

hi guy!欢迎来到这里,这里是对swin transformer的复现工作,众周所知transformer尽管在CV任务有着不俗的表现,但是这是牺牲了速度与算力为原则,这也是现在传统CNN没有被transformer取代的原因——CNN实在是太成熟了,目前业界不会冒风险并再挖坑填坑接纳transformer,而要想transformer替代CNN,必须要在各大CV任务上遥遥领先与传统CNN并且速度不亚于传统CNN,这样才会让业界重新花费代价去部署接纳transformer,这也是目前CV任务的研究热点,而这篇文章让人眼前一亮,让transformer替代CNN更接近了一步,这是一篇很好的论文,非常值得大家一读

? ? ? ?- 本代码基于官方Pytorch,保证原汁原味

- 复现与?寂寞你快进去?大佬撞车,不得不说大佬复现项目速度很快,要说不同就是本项目无需依赖PPIM

- 了解代码需要了解基本的 transformer,比如self attention,mha等,本文不做详细解释

论文解读

transformer在CV面临两个挑战

- 目标尺度不平衡

- 计算量太大

上述说明的更多是object detection和Semantic segmentation等,这些任务在目标尺度变化很大,所以之前object detection排行榜(COCO)一直都是Scaled-YOLOv4等传统CNN霸占着

为了解决上述问题,本文提出了两个创新点

- 引入CNN中常用的层次化构建方式构建层次化Transformer

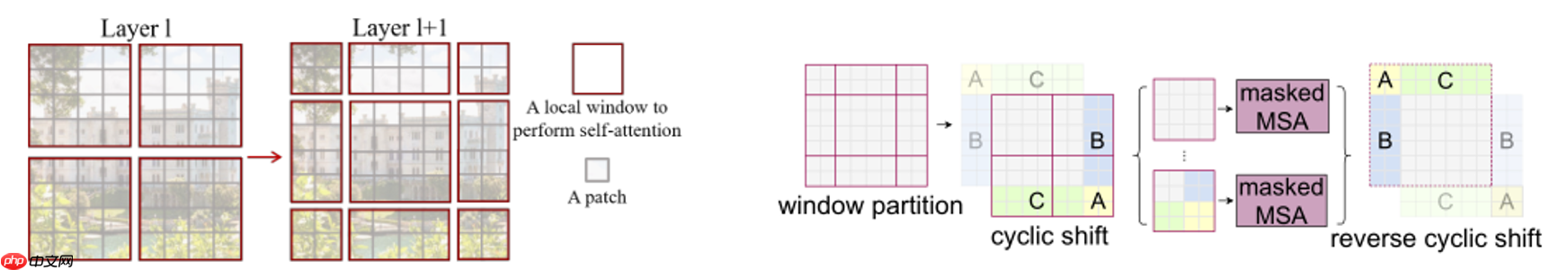

- 引入locality思想,对无重合的window区域内进行self-attention计算

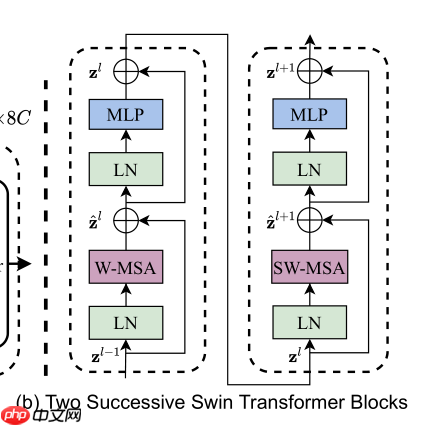

而本文精彩的地方,是针对分割后的window,进行重组,加强网络特征提取能力

? ? ? ?window分割后,分割的边缘失去了整体信息,网络更多关注window的中心部分,而边缘提供的信息有限,通过重组(一般是在第二个transformer blocks)进行更强的特征提取

代码构造

paddle没有torch一些api,需要自己定义

一部分代码参考timm库 :https://github.com/rwightman/pytorch-image-models

- torch.masked_fill == masked_fill

- torch.transpose == swapdim

- to_2tuple == 参考timm

- DroupPath == 参考timm

- Identity == 参考torch

import paddleimport paddle.nn as nnfrom itertools import repeatdef masked_fill(tensor, mask, value): cover = paddle.full_like(tensor, value) out = paddle.where(mask, tensor, cover) return outdef swapdim(x,num1,num2): a=list(range(len(x.shape))) a[num1], a[num2] = a[num2], a[num1] return x.transpose(a)def to_2tuple(x): return tuple(repeat(x, 2))def drop_path(x, drop_prob = 0., training = False): if drop_prob == 0. or not training: return x keep_prob = 1 - drop_prob shape = (x.shape[0],) + (1,) * (x.ndim - 1) random_tensor = paddle.to_tensor(keep_prob) + paddle.rand(shape) random_tensor = paddle.floor(random_tensor) output = x.divide(keep_prob) * random_tensor return outputclass DropPath(nn.Layer): def __init__(self, drop_prob=None): super(DropPath, self).__init__() self.drop_prob = drop_prob def forward(self, x): return drop_path(x, self.drop_prob, self.training)class Identity(nn.Layer): def __init__(self, *args, **kwargs): super(Identity, self).__init__() def forward(self, input): return input登录后复制 ? ?

经典的MLP

- MLP模块 由PLA推广而来

class Mlp(nn.Layer): def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.): super().__init__() out_features = out_features or in_features hidden_features = hidden_features or in_features self.fc1 = nn.Linear(in_features, hidden_features) self.act = act_layer() self.fc2 = nn.Linear(hidden_features, out_features) self.drop = nn.Dropout(drop) def forward(self, x): x = self.fc1(x) x = self.act(x) x = self.drop(x) x = self.fc2(x) x = self.drop(x) return x登录后复制 ? ?

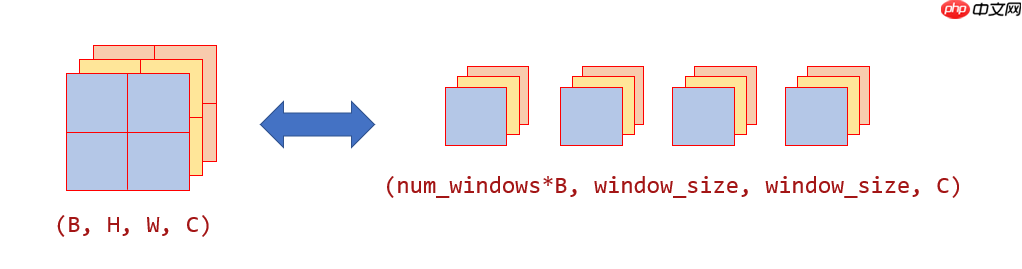

window的划分与合并

? ? ? ?window_partition是划分,window_reverse是合并

In [4]def window_partition(x, window_size): ”“” Args: x: (B, H, W, C) window_size (int): window size Returns: windows: (num_windows*B, window_size, window_size, C) “”“ B, H, W, C = x.shape x = x.reshape([B, H // window_size, window_size, W // window_size, window_size, C]) windows = x.transpose([0, 1, 3, 2, 4, 5]).reshape([-1, window_size, window_size, C]) return windowsdef window_reverse(windows, window_size, H, W): ”“” Args: windows: (num_windows*B, window_size, window_size, C) window_size (int): Window size H (int): Height of image W (int): Width of image Returns: x: (B, H, W, C) “”“ B = int(windows.shape[0] / (H * W / window_size / window_size)) x = windows.reshape([B, H // window_size, W // window_size, window_size, window_size, -1]) x = x.transpose([0, 1, 3, 2, 4, 5]).reshape([B, H, W, -1]) return x登录后复制 ? ?

W-MSA构建

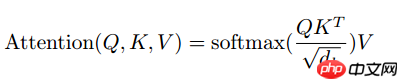

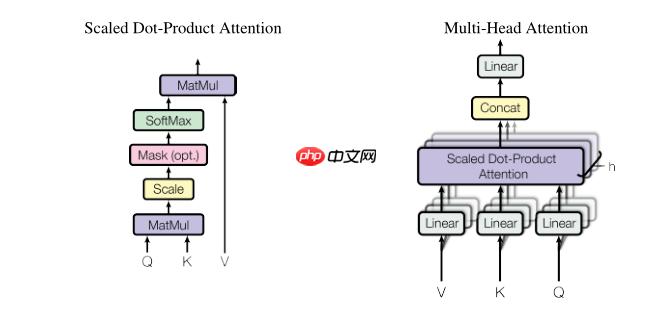

W-MSA(Window based multi-head self attention),它支持带shifted和不带shifted,要想深入理解这个,先从attention讲起

Attention

? ? ? ? ? ? ? ?- 计算复杂度小

- 大量并行运算

- 更好学习远距离依赖

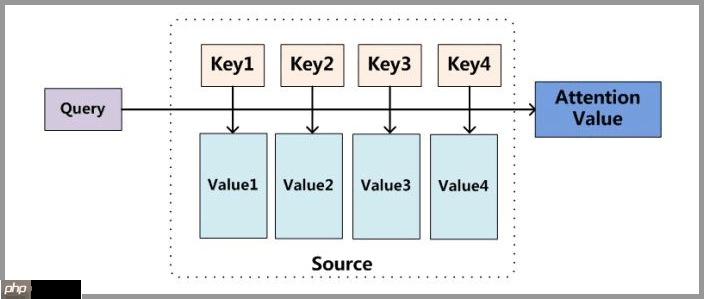

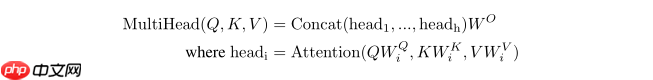

Multi-Head Self-attention

多头注意力(Multi-head Attention)机制是当前大行其道的Transformer、BERT等模型中的核心组件

? ? ? ?将模型分为多个头,形成多个子空间,可以让模型去关注不同方面的信息

? ? ? ?- paper:Attention is all you need

- num_heads:多注意力机制的头数

- attn_mask:用于限制attention中每个位置能看到的内容

注意,这一部分是本论文的精华,想要了解的同学必须要看懂源代码

In [5]class WindowAttention(nn.Layer): ”“” Window based multi-head self attention (W-MSA) module with relative position bias. It supports both of shifted and non-shifted window. Args: dim (int): Number of input channels. window_size (tuple[int]): The height and width of the window. num_heads (int): Number of attention heads. qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set attn_drop (float, optional): Dropout ratio of attention weight. Default: 0.0 proj_drop (float, optional): Dropout ratio of output. Default: 0.0 “”“ def __init__(self, dim, window_size, num_heads, qkv_bias=True, qk_scale=None, attn_drop=0., proj_drop=0.): super().__init__() self.dim = dim self.window_size = window_size # Wh, Ww self.num_heads = num_heads head_dim = dim // num_heads self.scale = qk_scale or head_dim ** -0.5 # define a parameter table of relative position bias relative_position_bias_table = self.create_parameter( shape=((2 * window_size[0] - 1) * (2 * window_size[1] - 1), num_heads), default_initializer=nn.initializer.Constant(value=0)) # 2*Wh-1 * 2*Ww-1, nH self.add_parameter(”relative_position_bias_table“, relative_position_bias_table) # get pair-wise relative position index for each token inside the window coords_h = paddle.arange(self.window_size[0]) coords_w = paddle.arange(self.window_size[1]) coords = paddle.stack(paddle.meshgrid([coords_h, coords_w])) # 2, Wh, Ww coords_flatten = paddle.flatten(coords, 1) # 2, Wh*Ww relative_coords = coords_flatten.unsqueeze(-1) - coords_flatten.unsqueeze(1) # 2, Wh*Ww, Wh*Ww relative_coords = relative_coords.transpose([1, 2, 0]) # Wh*Ww, Wh*Ww, 2 relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0 relative_coords[:, :, 1] += self.window_size[1] - 1 relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1 self.relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww self.register_buffer(”relative_position_index“, self.relative_position_index) self.qkv = nn.Linear(dim, dim * 3, bias_attr=qkv_bias) self.attn_drop = nn.Dropout(attn_drop) self.proj = nn.Linear(dim, dim) self.proj_drop = nn.Dropout(proj_drop) self.softmax = nn.Softmax(axis=-1) def forward(self, x, mask=None): ”“” Args: x: input features with shape of (num_windows*B, N, C) mask: (0/-inf) mask with shape of (num_windows, Wh*Ww, Wh*Ww) or None “”“ B_, N, C = x.shape qkv = self.qkv(x).reshape([B_, N, 3, self.num_heads, C // self.num_heads]).transpose([2, 0, 3, 1, 4]) q, k, v = qkv[0], qkv[1], qkv[2] # make torchscript happy (cannot use tensor as tuple) q = q * self.scale attn = q @ swapdim(k ,-2, -1) relative_position_bias = paddle.index_select(self.relative_position_bias_table, self.relative_position_index.reshape((-1,)),axis=0).reshape((self.window_size[0] * self.window_size[1],self.window_size[0] * self.window_size[1], -1)) relative_position_bias = relative_position_bias.transpose([2, 0, 1]) # nH, Wh*Ww, Wh*Ww attn = attn + relative_position_bias.unsqueeze(0) if mask is not None: nW = mask.shape[0] attn = attn.reshape([B_ // nW, nW, self.num_heads, N, N]) + mask.unsqueeze(1).unsqueeze(0) attn = attn.reshape([-1, self.num_heads, N, N]) attn = self.softmax(attn) else: attn = self.softmax(attn) attn = self.attn_drop(attn) x = swapdim((attn @ v),1, 2).reshape([B_, N, C]) x = self.proj(x) x = self.proj_drop(x) return x登录后复制 ? ?

Block构建与patch merging构建

? ? ? ?前面不做window shifted,后面做window shifted,这样做的好处可以提取较强的语义特征

In [6]class SwinTransformerBlock(nn.Layer): ”“” Swin Transformer Block. Args: dim (int): Number of input channels. input_resolution (tuple[int]): Input resulotion. num_heads (int): Number of attention heads. window_size (int): Window size. shift_size (int): Shift size for SW-MSA. mlp_ratio (float): Ratio of mlp hidden dim to embedding dim. qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set. drop (float, optional): Dropout rate. Default: 0.0 attn_drop (float, optional): Attention dropout rate. Default: 0.0 drop_path (float, optional): Stochastic depth rate. Default: 0.0 act_layer (nn.Module, optional): Activation layer. Default: nn.GELU norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm “”“ def __init__(self, dim, input_resolution, num_heads, window_size=7, shift_size=0, mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0., drop_path=0., act_layer=nn.GELU, norm_layer=nn.LayerNorm): super().__init__() self.dim = dim self.input_resolution = input_resolution self.num_heads = num_heads self.window_size = window_size self.shift_size = shift_size self.mlp_ratio = mlp_ratio if min(self.input_resolution) <= self.window_size: # if window size is larger than input resolution, we don't partition windows self.shift_size = 0 self.window_size = min(self.input_resolution) assert 0 <= self.shift_size < self.window_size, ”shift_size must in 0-window_size“ self.norm1 = norm_layer(dim) self.attn = WindowAttention( dim, window_size=to_2tuple(self.window_size), num_heads=num_heads, qkv_bias=qkv_bias, qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop) self.drop_path = DropPath(drop_path) if drop_path > 0. else Identity() self.norm2 = norm_layer(dim) mlp_hidden_dim = int(dim * mlp_ratio) self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, act_layer=act_layer, drop=drop) if self.shift_size > 0: # calculate attention mask for SW-MSA H, W = self.input_resolution img_mask = paddle.zeros((1, H, W, 1)) # 1 H W 1 h_slices = (slice(0, -self.window_size), slice(-self.window_size, -self.shift_size), slice(-self.shift_size, None)) w_slices = (slice(0, -self.window_size), slice(-self.window_size, -self.shift_size), slice(-self.shift_size, None)) cnt = 0 for h in h_slices: for w in w_slices: img_mask[:, h, w, :] = cnt cnt += 1 mask_windows = window_partition(img_mask, self.window_size) # nW, window_size, window_size, 1 mask_windows = mask_windows.reshape([-1, self.window_size * self.window_size]) attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2) attn_mask = masked_fill(attn_mask, attn_mask == 0, float(-100.0)) attn_mask = masked_fill(attn_mask, attn_mask != 0, float(0.0)) else: attn_mask = None self.register_buffer(”attn_mask“, attn_mask) def forward(self, x): H, W = self.input_resolution B, L, C = x.shape assert L == H * W, ”input feature has wrong size“ shortcut = x x = self.norm1(x) x = x.reshape([B, H, W, C]) # cyclic shift if self.shift_size > 0: shifted_x = paddle.roll(x, shifts=(-self.shift_size, -self.shift_size), axis=(1, 2)) else: shifted_x = x # partition windows x_windows = window_partition(shifted_x, self.window_size) # nW*B, window_size, window_size, C x_windows = x_windows.reshape([-1, self.window_size * self.window_size, C]) # nW*B, window_size*window_size, C # W-MSA/SW-MSA attn_windows = self.attn(x_windows, mask=self.attn_mask) # nW*B, window_size*window_size, C # merge windows attn_windows = attn_windows.reshape([-1, self.window_size, self.window_size, C]) shifted_x = window_reverse(attn_windows, self.window_size, H, W) # B H' W' C # reverse cyclic shift if self.shift_size > 0: x = paddle.roll(shifted_x, shifts=(self.shift_size, self.shift_size), axis=(1, 2)) else: x = shifted_x x = x.reshape([B, H * W, C]) # FFN x = shortcut + self.drop_path(x) x = x + self.drop_path(self.mlp(self.norm2(x))) return xclass PatchMerging(nn.Layer): ”“” Patch Merging Layer. Args: input_resolution (tuple[int]): Resolution of input feature. dim (int): Number of input channels. norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm “”“ def __init__(self, input_resolution, dim, norm_layer=nn.LayerNorm): super().__init__() self.input_resolution = input_resolution self.dim = dim self.reduction = nn.Linear(4 * dim, 2 * dim, bias_attr=False) self.norm = norm_layer(4 * dim) def forward(self, x): ”“” x: B, H*W, C “”“ H, W = self.input_resolution B, L, C = x.shape assert L == H * W, ”input feature has wrong size“ assert H % 2 == 0 and W % 2 == 0, f”x size ({H}*{W}) are not even.“ x = x.reshape([B, H, W, C]) x0 = x[:, 0::2, 0::2, :] # B H/2 W/2 C x1 = x[:, 1::2, 0::2, :] # B H/2 W/2 C x2 = x[:, 0::2, 1::2, :] # B H/2 W/2 C x3 = x[:, 1::2, 1::2, :] # B H/2 W/2 C x = paddle.concat([x0, x1, x2, x3], -1) # B H/2 W/2 4*C x = x.reshape([B, -1, 4 * C]) # B H/2*W/2 4*C x = self.norm(x) x = self.reduction(x) return x登录后复制 ? ?

将block和merging融合

In [7]class BasicLayer(nn.Layer): ”“” A basic Swin Transformer layer for one stage. Args: dim (int): Number of input channels. input_resolution (tuple[int]): Input resolution. depth (int): Number of blocks. num_heads (int): Number of attention heads. window_size (int): Local window size. mlp_ratio (float): Ratio of mlp hidden dim to embedding dim. qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set. drop (float, optional): Dropout rate. Default: 0.0 attn_drop (float, optional): Attention dropout rate. Default: 0.0 drop_path (float | tuple[float], optional): Stochastic depth rate. Default: 0.0 norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm downsample (nn.Module | None, optional): Downsample layer at the end of the layer. Default: None use_checkpoint (bool): Whether to use checkpointing to save memory. Default: False. “”“ def __init__(self, dim, input_resolution, depth, num_heads, window_size, mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0., drop_path=0., norm_layer=nn.LayerNorm, downsample=None): super().__init__() self.dim = dim self.input_resolution = input_resolution self.depth = depth # build blocks self.blocks = nn.LayerList([ SwinTransformerBlock(dim=dim, input_resolution=input_resolution, num_heads=num_heads, window_size=window_size, shift_size=0 if (i % 2 == 0) else window_size // 2, mlp_ratio=mlp_ratio, qkv_bias=qkv_bias, qk_scale=qk_scale, drop=drop, attn_drop=attn_drop, drop_path=drop_path[i] if isinstance(drop_path, list) else drop_path, norm_layer=norm_layer) for i in range(depth)]) # patch merging layer if downsample is not None: self.downsample = downsample(input_resolution, dim=dim, norm_layer=norm_layer) else: self.downsample = None def forward(self, x): for blk in self.blocks: x = blk(x) if self.downsample is not None: x = self.downsample(x) return xclass PatchEmbed(nn.Layer): ”“” Image to Patch Embedding Args: img_size (int): Image size. Default: 224. patch_size (int): Patch token size. Default: 4. in_chans (int): Number of input image channels. Default: 3. embed_dim (int): Number of linear projection output channels. Default: 96. norm_layer (nn.Module, optional): Normalization layer. Default: None “”“ def __init__(self, img_size=224, patch_size=4, in_chans=3, embed_dim=96, norm_layer=None): super().__init__() img_size = to_2tuple(img_size) patch_size = to_2tuple(patch_size) patches_resolution = [img_size[0] // patch_size[0], img_size[1] // patch_size[1]] self.img_size = img_size self.patch_size = patch_size self.patches_resolution = patches_resolution self.num_patches = patches_resolution[0] * patches_resolution[1] self.in_chans = in_chans self.embed_dim = embed_dim self.proj = nn.Conv2D(in_chans, embed_dim, kernel_size=patch_size, stride=patch_size) if norm_layer is not None: self.norm = norm_layer(embed_dim) else: self.norm = None def forward(self, x): B, C, H, W = x.shape # FIXME look at relaxing size constraints assert H == self.img_size[0] and W == self.img_size[1], f”Input image size ({H}*{W}) doesn't match model ({self.img_size[0]}*{self.img_size[1]}).“ x = swapdim(self.proj(x).flatten(2), 1, 2) # B Ph*Pw C if self.norm is not None: x = self.norm(x) return x登录后复制 ? ?

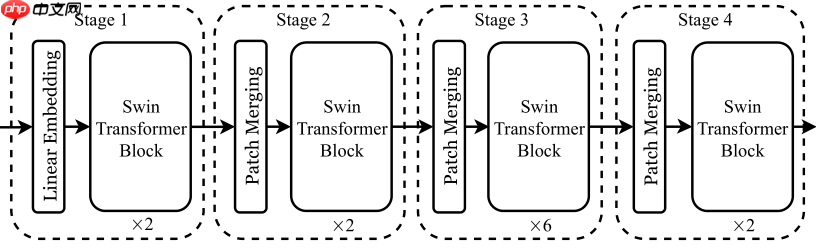

模型最终构造

? ? ? ?这一部分就是backbone的搭建了,不同backbone搭配方式也不同,这次说一下为什么倒数第二个stage要比其他三个多,因为stage0,stage1部输入的图像尺寸大,过多增加层数会造成运算增加,而在stage2输入的图像尺寸小,对运算开销小,方便提取高层语义,最后的stage3虽然输入空间维度小,但是Channel过大,会带来不小的计算开销,不如把计算资源分配给stage2,这也是ResNet经典的思想

- swin tiny:[ 2, 2, 6, 2 ]

- swin samll:[ 2, 2, 18, 2 ]

- swin base: [ 2, 2, 18, 2 ]

- swin large:[ 2, 2, 18, 2 ]

class SwinTransformer(nn.Layer): ”“” Swin Transformer A PyTorch impl of : `Swin Transformer: Hierarchical Vision Transformer using Shifted Windows` - https://arxiv.org/pdf/2103.14030 Args: img_size (int | tuple(int)): Input image size. Default 224 patch_size (int | tuple(int)): Patch size. Default: 4 in_chans (int): Number of input image channels. Default: 3 num_classes (int): Number of classes for classification head. Default: 1000 embed_dim (int): Patch embedding dimension. Default: 96 depths (tuple(int)): Depth of each Swin Transformer layer. num_heads (tuple(int)): Number of attention heads in different layers. window_size (int): Window size. Default: 7 mlp_ratio (float): Ratio of mlp hidden dim to embedding dim. Default: 4 qkv_bias (bool): If True, add a learnable bias to query, key, value. Default: True qk_scale (float): Override default qk scale of head_dim ** -0.5 if set. Default: None drop_rate (float): Dropout rate. Default: 0 attn_drop_rate (float): Attention dropout rate. Default: 0 drop_path_rate (float): Stochastic depth rate. Default: 0.1 norm_layer (nn.Module): Normalization layer. Default: nn.LayerNorm. ape (bool): If True, add absolute position embedding to the patch embedding. Default: False patch_norm (bool): If True, add normalization after patch embedding. Default: True use_checkpoint (bool): Whether to use checkpointing to save memory. Default: False “”“ def __init__(self, img_size=224, patch_size=4, in_chans=3, num_classes=1000, embed_dim=96, depths=[2, 2, 6, 2], num_heads=[3, 6, 12, 24], window_size=7, mlp_ratio=4., qkv_bias=True, qk_scale=None, drop_rate=0., attn_drop_rate=0., drop_path_rate=0.1, norm_layer=nn.LayerNorm, ape=False, patch_norm=True, **kwargs): super().__init__() self.num_classes = num_classes self.num_layers = len(depths) self.embed_dim = embed_dim self.ape = ape self.patch_norm = patch_norm self.num_features = int(embed_dim * 2 ** (self.num_layers - 1)) self.mlp_ratio = mlp_ratio # split image into non-overlapping patches self.patch_embed = PatchEmbed( img_size=img_size, patch_size=patch_size, in_chans=in_chans, embed_dim=embed_dim, norm_layer=norm_layer if self.patch_norm else None) num_patches = self.patch_embed.num_patches patches_resolution = self.patch_embed.patches_resolution self.patches_resolution = patches_resolution # absolute position embedding if self.ape: self.absolute_pos_embed = self.create_parameter(shape=(1, num_patches, embed_dim),default_initializer=nn.initializer.Constant(value=0)) self.add_parameter(”absolute_pos_embed“, self.absolute_pos_embed) self.pos_drop = nn.Dropout(p=drop_rate) # stochastic depth dpr = [x for x in paddle.linspace(0, drop_path_rate, sum(depths))] # stochastic depth decay rule # build layers self.layers = nn.LayerList() for i_layer in range(self.num_layers): layer = BasicLayer(dim=int(embed_dim * 2 ** i_layer), input_resolution=(patches_resolution[0] // (2 ** i_layer), patches_resolution[1] // (2 ** i_layer)), depth=depths[i_layer], num_heads=num_heads[i_layer], window_size=window_size, mlp_ratio=self.mlp_ratio, qkv_bias=qkv_bias, qk_scale=qk_scale, drop=drop_rate, attn_drop=attn_drop_rate, drop_path=dpr[sum(depths[:i_layer]):sum(depths[:i_layer + 1])], norm_layer=norm_layer, downsample=PatchMerging if (i_layer < self.num_layers - 1) else None ) self.layers.append(layer) self.norm = norm_layer(self.num_features) self.avgpool = nn.AdaptiveAvgPool1D(1) self.head = nn.Linear(self.num_features, num_classes) if num_classes > 0 else Identity() def forward_features(self, x): x = self.patch_embed(x) if self.ape: x = x + self.absolute_pos_embed x = self.pos_drop(x) for layer in self.layers: x = layer(x) x = self.norm(x) # B L C x = self.avgpool(swapdim(x,1, 2)) # B C 1 x = paddle.flatten(x, 1) return x def forward(self, x): x = self.forward_features(x) x = self.head(x) return x登录后复制 ? ?

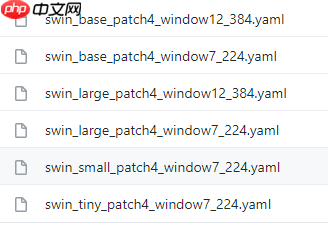

模型大小定义

官方发布模型如下

? ? ? ?In [9]def swin_tiny_window7_224(**kwargs): model = SwinTransformer(img_size = 224, embed_dim = 96, depths = [ 2, 2, 6, 2 ], num_heads = [ 3, 6, 12, 24 ], window_size = 7, drop_path_rate=0.2, **kwargs) return modeldef swin_small_window7_224(**kwargs): model = SwinTransformer(img_size = 224, embed_dim = 96, depths = [ 2, 2, 18, 2 ], num_heads = [ 3, 6, 12, 24 ], window_size = 7, drop_path_rate=0.3, **kwargs) return modeldef swin_base_window7_224(**kwargs): model = SwinTransformer(img_size = 224, embed_dim = 128, depths = [ 2, 2, 18, 2 ], num_heads = [ 4, 8, 16, 32 ], window_size = 7, drop_path_rate=0.5, **kwargs) return modeldef swin_large_window7_224(**kwargs): model = SwinTransformer(img_size = 224, embed_dim = 192, depths = [ 2, 2, 18, 2 ], num_heads = [ 6, 12, 24, 48 ], window_size = 7, **kwargs) return modeldef swin_base_window12_384(**kwargs): model = SwinTransformer(img_size = 384, embed_dim = 128, depths = [ 2, 2, 18, 2 ], num_heads = [ 4, 8, 16, 32 ], window_size = 12, **kwargs) return modeldef swin_large_window12_384(**kwargs): model = SwinTransformer(img_size = 384, embed_dim = 192, depths = [ 2, 2, 18, 2 ], num_heads = [ 6, 12, 24, 48 ], window_size = 12, **kwargs) return model登录后复制 ? ?In [10]

model = swin_tiny_window7_224(num_classes = 10)model = paddle.Model(model)model.summary((1,3,224,224))登录后复制 ? ? ? ?

----------------------------------------------------------------------------------- Layer (type) Input Shape Output Shape Param # =================================================================================== Conv2D-1 [[1, 3, 224, 224]] [1, 96, 56, 56] 4,704 LayerNorm-1 [[1, 3136, 96]] [1, 3136, 96] 192 PatchEmbed-1 [[1, 3, 224, 224]] [1, 3136, 96] 0 Dropout-1 [[1, 3136, 96]] [1, 3136, 96] 0 LayerNorm-2 [[1, 3136, 96]] [1, 3136, 96] 192 Linear-1 [[64, 49, 96]] [64, 49, 288] 27,936 Softmax-1 [[64, 3, 49, 49]] [64, 3, 49, 49] 0 Dropout-2 [[64, 3, 49, 49]] [64, 3, 49, 49] 0 Linear-2 [[64, 49, 96]] [64, 49, 96] 9,312 Dropout-3 [[64, 49, 96]] [64, 49, 96] 0 WindowAttention-1 [[64, 49, 96]] [64, 49, 96] 507 Identity-1 [[1, 3136, 96]] [1, 3136, 96] 0 LayerNorm-3 [[1, 3136, 96]] [1, 3136, 96] 192 Linear-3 [[1, 3136, 96]] [1, 3136, 384] 37,248 GELU-1 [[1, 3136, 384]] [1, 3136, 384] 0 Dropout-4 [[1, 3136, 96]] [1, 3136, 96] 0 Linear-4 [[1, 3136, 384]] [1, 3136, 96] 36,960 Mlp-1 [[1, 3136, 96]] [1, 3136, 96] 0 SwinTransformerBlock-1 [[1, 3136, 96]] [1, 3136, 96] 0 LayerNorm-4 [[1, 3136, 96]] [1, 3136, 96] 192 Linear-5 [[64, 49, 96]] [64, 49, 288] 27,936 Softmax-2 [[64, 3, 49, 49]] [64, 3, 49, 49] 0 Dropout-5 [[64, 3, 49, 49]] [64, 3, 49, 49] 0 Linear-6 [[64, 49, 96]] [64, 49, 96] 9,312 Dropout-6 [[64, 49, 96]] [64, 49, 96] 0 WindowAttention-2 [[64, 49, 96]] [64, 49, 96] 507 DropPath-1 [[1, 3136, 96]] [1, 3136, 96] 0 LayerNorm-5 [[1, 3136, 96]] [1, 3136, 96] 192 Linear-7 [[1, 3136, 96]] [1, 3136, 384] 37,248 GELU-2 [[1, 3136, 384]] [1, 3136, 384] 0 Dropout-7 [[1, 3136, 96]] [1, 3136, 96] 0 Linear-8 [[1, 3136, 384]] [1, 3136, 96] 36,960 Mlp-2 [[1, 3136, 96]] [1, 3136, 96] 0 SwinTransformerBlock-2 [[1, 3136, 96]] [1, 3136, 96] 0 LayerNorm-6 [[1, 784, 384]] [1, 784, 384] 768 Linear-9 [[1, 784, 384]] [1, 784, 192] 73,728 PatchMerging-1 [[1, 3136, 96]] [1, 784, 192] 0 BasicLayer-1 [[1, 3136, 96]] [1, 784, 192] 0 LayerNorm-7 [[1, 784, 192]] [1, 784, 192] 384 Linear-10 [[16, 49, 192]] [16, 49, 576] 111,168 Softmax-3 [[16, 6, 49, 49]] [16, 6, 49, 49] 0 Dropout-8 [[16, 6, 49, 49]] [16, 6, 49, 49] 0 Linear-11 [[16, 49, 192]] [16, 49, 192] 37,056 Dropout-9 [[16, 49, 192]] [16, 49, 192] 0 WindowAttention-3 [[16, 49, 192]] [16, 49, 192] 1,014 DropPath-2 [[1, 784, 192]] [1, 784, 192] 0 LayerNorm-8 [[1, 784, 192]] [1, 784, 192] 384 Linear-12 [[1, 784, 192]] [1, 784, 768] 148,224 GELU-3 [[1, 784, 768]] [1, 784, 768] 0 Dropout-10 [[1, 784, 192]] [1, 784, 192] 0 Linear-13 [[1, 784, 768]] [1, 784, 192] 147,648 Mlp-3 [[1, 784, 192]] [1, 784, 192] 0 SwinTransformerBlock-3 [[1, 784, 192]] [1, 784, 192] 0 LayerNorm-9 [[1, 784, 192]] [1, 784, 192] 384 Linear-14 [[16, 49, 192]] [16, 49, 576] 111,168 Softmax-4 [[16, 6, 49, 49]] [16, 6, 49, 49] 0 Dropout-11 [[16, 6, 49, 49]] [16, 6, 49, 49] 0 Linear-15 [[16, 49, 192]] [16, 49, 192] 37,056 Dropout-12 [[16, 49, 192]] [16, 49, 192] 0 WindowAttention-4 [[16, 49, 192]] [16, 49, 192] 1,014 DropPath-3 [[1, 784, 192]] [1, 784, 192] 0 LayerNorm-10 [[1, 784, 192]] [1, 784, 192] 384 Linear-16 [[1, 784, 192]] [1, 784, 768] 148,224 GELU-4 [[1, 784, 768]] [1, 784, 768] 0 Dropout-13 [[1, 784, 192]] [1, 784, 192] 0 Linear-17 [[1, 784, 768]] [1, 784, 192] 147,648 Mlp-4 [[1, 784, 192]] [1, 784, 192] 0 SwinTransformerBlock-4 [[1, 784, 192]] [1, 784, 192] 0 LayerNorm-11 [[1, 196, 768]] [1, 196, 768] 1,536 Linear-18 [[1, 196, 768]] [1, 196, 384] 294,912 PatchMerging-2 [[1, 784, 192]] [1, 196, 384] 0 BasicLayer-2 [[1, 784, 192]] [1, 196, 384] 0 LayerNorm-12 [[1, 196, 384]] [1, 196, 384] 768 Linear-19 [[4, 49, 384]] [4, 49, 1152] 443,520 Softmax-5 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Dropout-14 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Linear-20 [[4, 49, 384]] [4, 49, 384] 147,840 Dropout-15 [[4, 49, 384]] [4, 49, 384] 0 WindowAttention-5 [[4, 49, 384]] [4, 49, 384] 2,028 DropPath-4 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-13 [[1, 196, 384]] [1, 196, 384] 768 Linear-21 [[1, 196, 384]] [1, 196, 1536] 591,360 GELU-5 [[1, 196, 1536]] [1, 196, 1536] 0 Dropout-16 [[1, 196, 384]] [1, 196, 384] 0 Linear-22 [[1, 196, 1536]] [1, 196, 384] 590,208 Mlp-5 [[1, 196, 384]] [1, 196, 384] 0 SwinTransformerBlock-5 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-14 [[1, 196, 384]] [1, 196, 384] 768 Linear-23 [[4, 49, 384]] [4, 49, 1152] 443,520 Softmax-6 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Dropout-17 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Linear-24 [[4, 49, 384]] [4, 49, 384] 147,840 Dropout-18 [[4, 49, 384]] [4, 49, 384] 0 WindowAttention-6 [[4, 49, 384]] [4, 49, 384] 2,028 DropPath-5 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-15 [[1, 196, 384]] [1, 196, 384] 768 Linear-25 [[1, 196, 384]] [1, 196, 1536] 591,360 GELU-6 [[1, 196, 1536]] [1, 196, 1536] 0 Dropout-19 [[1, 196, 384]] [1, 196, 384] 0 Linear-26 [[1, 196, 1536]] [1, 196, 384] 590,208 Mlp-6 [[1, 196, 384]] [1, 196, 384] 0 SwinTransformerBlock-6 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-16 [[1, 196, 384]] [1, 196, 384] 768 Linear-27 [[4, 49, 384]] [4, 49, 1152] 443,520 Softmax-7 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Dropout-20 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Linear-28 [[4, 49, 384]] [4, 49, 384] 147,840 Dropout-21 [[4, 49, 384]] [4, 49, 384] 0 WindowAttention-7 [[4, 49, 384]] [4, 49, 384] 2,028 DropPath-6 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-17 [[1, 196, 384]] [1, 196, 384] 768 Linear-29 [[1, 196, 384]] [1, 196, 1536] 591,360 GELU-7 [[1, 196, 1536]] [1, 196, 1536] 0 Dropout-22 [[1, 196, 384]] [1, 196, 384] 0 Linear-30 [[1, 196, 1536]] [1, 196, 384] 590,208 Mlp-7 [[1, 196, 384]] [1, 196, 384] 0 SwinTransformerBlock-7 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-18 [[1, 196, 384]] [1, 196, 384] 768 Linear-31 [[4, 49, 384]] [4, 49, 1152] 443,520 Softmax-8 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Dropout-23 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Linear-32 [[4, 49, 384]] [4, 49, 384] 147,840 Dropout-24 [[4, 49, 384]] [4, 49, 384] 0 WindowAttention-8 [[4, 49, 384]] [4, 49, 384] 2,028 DropPath-7 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-19 [[1, 196, 384]] [1, 196, 384] 768 Linear-33 [[1, 196, 384]] [1, 196, 1536] 591,360 GELU-8 [[1, 196, 1536]] [1, 196, 1536] 0 Dropout-25 [[1, 196, 384]] [1, 196, 384] 0 Linear-34 [[1, 196, 1536]] [1, 196, 384] 590,208 Mlp-8 [[1, 196, 384]] [1, 196, 384] 0 SwinTransformerBlock-8 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-20 [[1, 196, 384]] [1, 196, 384] 768 Linear-35 [[4, 49, 384]] [4, 49, 1152] 443,520 Softmax-9 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Dropout-26 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Linear-36 [[4, 49, 384]] [4, 49, 384] 147,840 Dropout-27 [[4, 49, 384]] [4, 49, 384] 0 WindowAttention-9 [[4, 49, 384]] [4, 49, 384] 2,028 DropPath-8 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-21 [[1, 196, 384]] [1, 196, 384] 768 Linear-37 [[1, 196, 384]] [1, 196, 1536] 591,360 GELU-9 [[1, 196, 1536]] [1, 196, 1536] 0 Dropout-28 [[1, 196, 384]] [1, 196, 384] 0 Linear-38 [[1, 196, 1536]] [1, 196, 384] 590,208 Mlp-9 [[1, 196, 384]] [1, 196, 384] 0 SwinTransformerBlock-9 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-22 [[1, 196, 384]] [1, 196, 384] 768 Linear-39 [[4, 49, 384]] [4, 49, 1152] 443,520 Softmax-10 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Dropout-29 [[4, 12, 49, 49]] [4, 12, 49, 49] 0 Linear-40 [[4, 49, 384]] [4, 49, 384] 147,840 Dropout-30 [[4, 49, 384]] [4, 49, 384] 0 WindowAttention-10 [[4, 49, 384]] [4, 49, 384] 2,028 DropPath-9 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-23 [[1, 196, 384]] [1, 196, 384] 768 Linear-41 [[1, 196, 384]] [1, 196, 1536] 591,360 GELU-10 [[1, 196, 1536]] [1, 196, 1536] 0 Dropout-31 [[1, 196, 384]] [1, 196, 384] 0 Linear-42 [[1, 196, 1536]] [1, 196, 384] 590,208 Mlp-10 [[1, 196, 384]] [1, 196, 384] 0 SwinTransformerBlock-10 [[1, 196, 384]] [1, 196, 384] 0 LayerNorm-24 [[1, 49, 1536]] [1, 49, 1536] 3,072 Linear-43 [[1, 49, 1536]] [1, 49, 768] 1,179,648 PatchMerging-3 [[1, 196, 384]] [1, 49, 768] 0 BasicLayer-3 [[1, 196, 384]] [1, 49, 768] 0 LayerNorm-25 [[1, 49, 768]] [1, 49, 768] 1,536 Linear-44 [[1, 49, 768]] [1, 49, 2304] 1,771,776 Softmax-11 [[1, 24, 49, 49]] [1, 24, 49, 49] 0 Dropout-32 [[1, 24, 49, 49]] [1, 24, 49, 49] 0 Linear-45 [[1, 49, 768]] [1, 49, 768] 590,592 Dropout-33 [[1, 49, 768]] [1, 49, 768] 0 WindowAttention-11 [[1, 49, 768]] [1, 49, 768] 4,056 DropPath-10 [[1, 49, 768]] [1, 49, 768] 0 LayerNorm-26 [[1, 49, 768]] [1, 49, 768] 1,536 Linear-46 [[1, 49, 768]] [1, 49, 3072] 2,362,368 GELU-11 [[1, 49, 3072]] [1, 49, 3072] 0 Dropout-34 [[1, 49, 768]] [1, 49, 768] 0 Linear-47 [[1, 49, 3072]] [1, 49, 768] 2,360,064 Mlp-11 [[1, 49, 768]] [1, 49, 768] 0 SwinTransformerBlock-11 [[1, 49, 768]] [1, 49, 768] 0 LayerNorm-27 [[1, 49, 768]] [1, 49, 768] 1,536 Linear-48 [[1, 49, 768]] [1, 49, 2304] 1,771,776 Softmax-12 [[1, 24, 49, 49]] [1, 24, 49, 49] 0 Dropout-35 [[1, 24, 49, 49]] [1, 24, 49, 49] 0 Linear-49 [[1, 49, 768]] [1, 49, 768] 590,592 Dropout-36 [[1, 49, 768]] [1, 49, 768] 0 WindowAttention-12 [[1, 49, 768]] [1, 49, 768] 4,056 DropPath-11 [[1, 49, 768]] [1, 49, 768] 0 LayerNorm-28 [[1, 49, 768]] [1, 49, 768] 1,536 Linear-50 [[1, 49, 768]] [1, 49, 3072] 2,362,368 GELU-12 [[1, 49, 3072]] [1, 49, 3072] 0 Dropout-37 [[1, 49, 768]] [1, 49, 768] 0 Linear-51 [[1, 49, 3072]] [1, 49, 768] 2,360,064 Mlp-12 [[1, 49, 768]] [1, 49, 768] 0 SwinTransformerBlock-12 [[1, 49, 768]] [1, 49, 768] 0 BasicLayer-4 [[1, 49, 768]] [1, 49, 768] 0 LayerNorm-29 [[1, 49, 768]] [1, 49, 768] 1,536 AdaptiveAvgPool1D-1 [[1, 768, 49]] [1, 768, 1] 0 Linear-52 [[1, 768]] [1, 10] 7,690 ===================================================================================Total params: 27,527,044Trainable params: 27,527,044Non-trainable params: 0-----------------------------------------------------------------------------------Input size (MB): 0.57Forward/backward pass size (MB): 282.34Params size (MB): 105.01Estimated Total Size (MB): 387.92-----------------------------------------------------------------------------------登录后复制 ? ? ? ?

{'total_params': 27527044, 'trainable_params': 27527044}登录后复制 ? ? ? ? ? ? ? ?

用Cifar10训练

重要的事情说三遍:batch_size?一定要调小!调小!调小!不然会?cuda error (9)

- transformer网络拟合数据没有传统CNN快

- 读者可以自由调试网络

- 预训练模型可以去?Swin Transformer:层次化视觉 Transformer?这里要赞扬一下大佬的PPIM~推荐大家试一下

import paddle.vision.transforms as Tfrom paddle.vision.datasets import Cifar10#数据准备transform = T.Compose([ T.Resize(size=(224,224)), T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225],data_format='HWC'), T.ToTensor()])train_dataset = Cifar10(mode='train', transform=transform)val_dataset = Cifar10(mode='test', transform=transform)model.prepare(optimizer=paddle.optimizer.SGD(learning_rate=0.001,parameters=model.parameters()), loss=paddle.nn.CrossEntropyLoss(), metrics=paddle.metric.Accuracy())vdl_callback = paddle.callbacks.VisualDL(log_dir='log') # 训练可视化model.fit( train_data=train_dataset, eval_data=val_dataset, batch_size=8, epochs=10, verbose=1, callbacks=vdl_callback # 训练可视化)登录后复制 ? ?

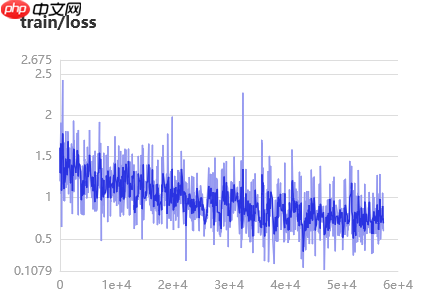

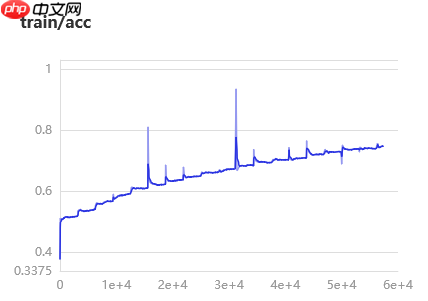

? ? ? ? ?- 我们可以看到该模型收敛相比传统CNN收敛十分慢,一个epoch需要15 min左右

- 收敛图像几乎呈直线增长,不同于传统CNN先快后慢

- 由于给的算力只有8小时,最高收敛到acc = 0.75左右,算力多的伙伴可以尝试一下

- 已在官方torch代码测试,loss和上述差不多

- 进一步说明传统CNN在这一方面(收敛性)强于transformer,如果有能力的同学可以加更多的identity魔改网络增加模型收敛性

来源:https://www.php.cn/faq/1414459.html

免责声明:文中图文均来自网络,如有侵权请联系删除,心愿游戏发布此文仅为传递信息,不代表心愿游戏认同其观点或证实其描述。

相关文章

更多-

- nef 格式图片降噪处理用什么工具 效果如何

- 时间:2025-07-29

-

- 邮箱长时间未登录被注销了能恢复吗?

- 时间:2025-07-29

-

- Outlook收件箱邮件不同步怎么办?

- 时间:2025-07-29

-

- 为什么客户端收邮件总是延迟?

- 时间:2025-07-29

-

- 一英寸在磁带宽度中是多少 老式设备规格

- 时间:2025-07-29

-

- 大卡和年龄的关系 不同年龄段热量需求

- 时间:2025-07-29

-

- jif 格式是 gif 的变体吗 现在还常用吗

- 时间:2025-07-29

-

- hdr 格式图片在显示器上能完全显示吗 普通显示器有局限吗

- 时间:2025-07-29

大家都在玩

大家都在看

更多-

- 辉烬武魂搭配推荐

- 时间:2025-11-09

-

- 辉烬知世配队攻略

- 时间:2025-11-09

-

- 二重螺旋耶尔与奥利弗谁厉害

- 时间:2025-11-09

-

- 有魅力比较帅的王者荣耀名字

- 时间:2025-11-09

-

- 二重螺旋丽蓓卡技能解析

- 时间:2025-11-09

-

- “原来心累可以写得这么刀”

- 时间:2025-11-09

-

- 二重螺旋梦魇残响

- 时间:2025-11-09

-

- 迫击炮游戏推荐2025

- 时间:2025-11-09